Running and Quitting

Overview

Teaching: 15 min

Exercises: 0 min

Questions

How can I run Python programs?

Objectives

Launch the JupyterLab server.

Create a Jupyter notebook.

Shut down the JupyterLab server.

Create and run Python cells in a notebook.

Getting the Data

The data we will be using is taken from the gapminder dataset. To obtain it, download and unzip the file python-novice-gapminder-data.zip.

In order to follow the presented material, you should put the resulting data directory/folder in your home folder, and you should launch the JupyterLab server from the directory that contains the data directory (see Starting JupyterLab).

Why JupyterLab

There are many ways to write and run Python code. For example, you can use a text editor to create and edit a file containing Python code and then run that file from the command line, or you can start the Python interpreter in a terminal and type code into it directly. While these are common approaches, we are going to use the Jupyter Notebook via JupyterLab for the remainder of this workshop.

This has several advantages:

- You can easily type, edit, and copy and paste blocks of code.

- Tab completion allows you to easily access the names of things you are using and learn more about them.

- It allows you to annotate your code with links, different sized text, bullets, etc. to make it more accessible to you and your collaborators.

- It allows you to display figures next to the code that produces them to tell a complete story of the analysis.

Each notebook contains one or more cells that contain code, text, or images.

Getting Started with JupyterLab

JupyterLab is an application with a user interface you use in your browser. It enables us to work with Jupyter notebooks, text files, and terminals in an integrated manner.

JupyterLab is included as part of the Anaconda Python distribution. If you have not already installed the Anaconda Python distribution, see the setup instructions.

Starting JupyterLab

You can start the JupyterLab server through the command line or through an application called Anaconda Navigator. Anaconda Navigator is included as part of the Anaconda Python distribution.

macOS - Command Line

To start the JupyterLab server you will need to access the command line through the Terminal. There are two ways to open Terminal on Mac.

- In your Applications folder, open Utilities and double-click on Terminal

- Press Command + spacebar to launch Spotlight. Type Terminal and then double-click the search result or hit Enter

After you have launched Terminal, type the command to launch the JupyterLab server.

$ jupyter lab

Windows Users - Command Line

To start the JupyterLab server you will need to access the Anaconda Prompt.

Press the Windows logo key, search for Anaconda Prompt, and then click the result or press Enter.

After you have launched the Anaconda Prompt, type the command:

$ jupyter lab

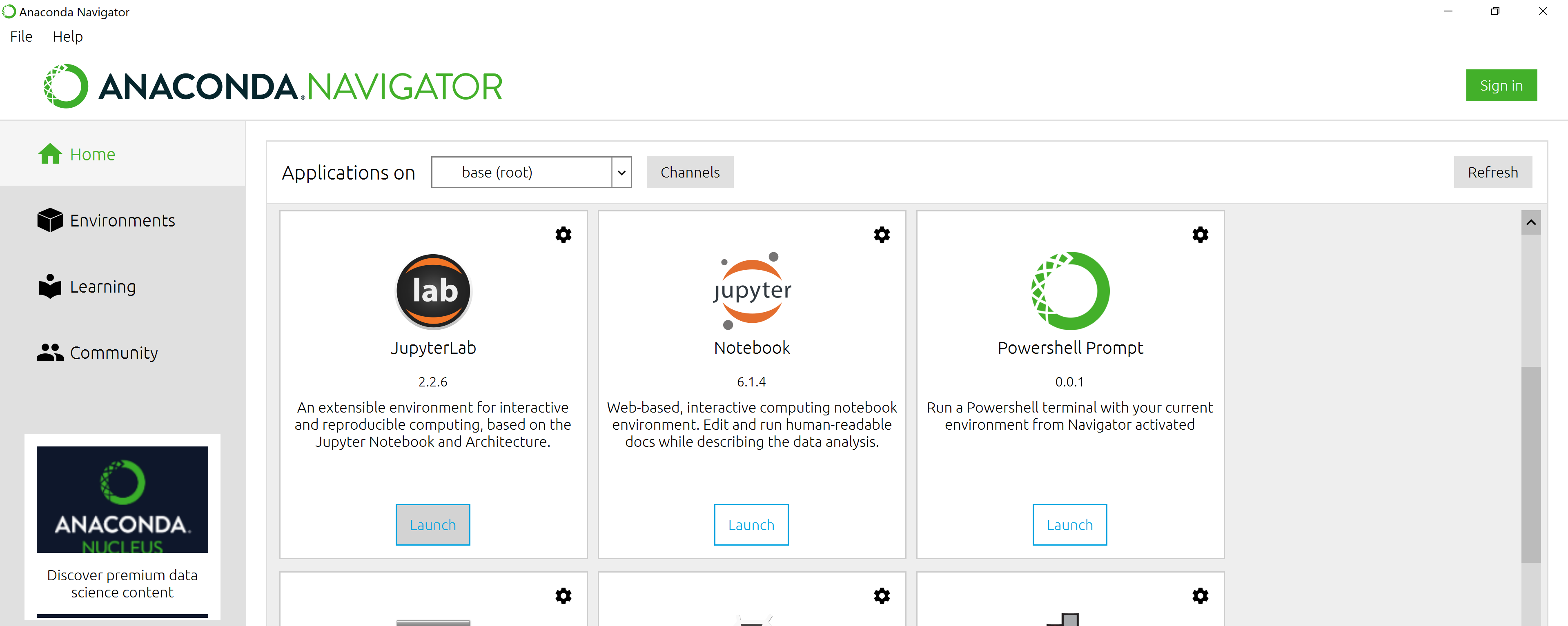

Anaconda Navigator

To start a JupyterLab server from Anaconda Navigator, you must first start Anaconda Navigator (detailed instructions are available for macOS, Windows, and Linux). You can search for Anaconda Navigator via Spotlight on macOS (Command + spacebar) or the Windows search function (Windows Logo Key), or you can open a terminal shell and run the anaconda-navigator executable from the command line.

After you have launched Anaconda Navigator, click the Launch button under JupyterLab. You may need to scroll down to find it.

Here is a screenshot of an Anaconda Navigator page similar to the one that should open on either macOS or Windows.

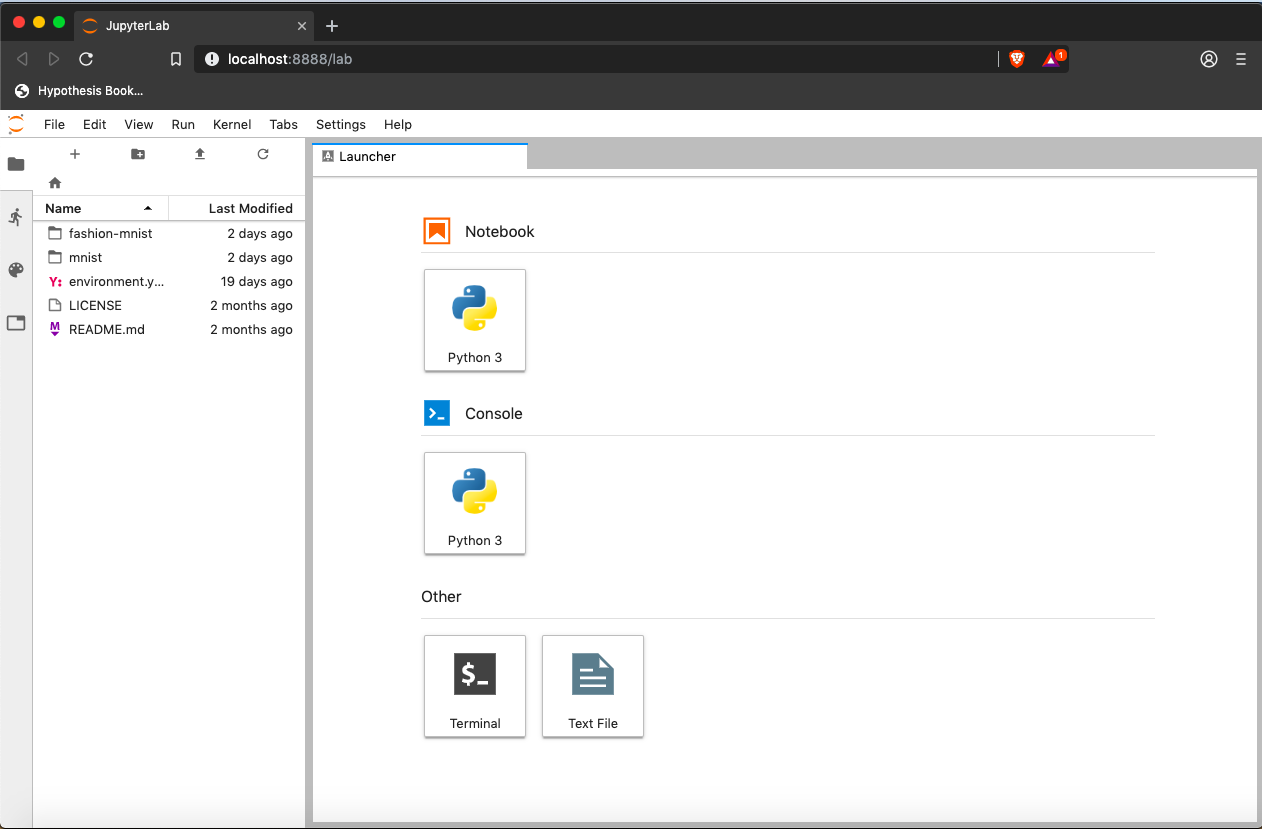

And here is a screenshot of a JupyterLab landing page that should be similar to the one that opens in your default web browser after starting the JupyterLab server on either macOS or Windows.

The JupyterLab Interface

The JupyterLab Interface consists of the Menu Bar, a collapsible Left Side Bar, and the Main Work Area, which contains tabs of documents and activities.

Menu Bar

The Menu Bar at the top of JupyterLab has the top-level menus that expose various actions available in JupyterLab along with their keyboard shortcuts (where applicable). The following menus are included by default.

- File: Actions related to files and directories such as New, Open, Close, Save, etc. The File menu also includes the Shut Down action used to shut down the JupyterLab server.

- Edit: Actions related to editing documents and other activities such as Undo, Cut, Copy, Paste, etc.

- View: Actions that alter the appearance of JupyterLab.

- Run: Actions for running code in different activities such as notebooks and code consoles (discussed below).

- Kernel: Actions for managing kernels. Kernels in Jupyter will be explained in more detail below.

- Tabs: A list of the open documents and activities in the main work area.

- Settings: Common JupyterLab settings can be configured using this menu. There is also an Advanced Settings Editor option in the dropdown menu that provides more fine-grained control of JupyterLab settings and configuration options.

- Help: A list of JupyterLab and kernel help links.

Kernels

The JupyterLab docs define kernels as “separate processes started by the server that run your code in different programming languages and environments.” When we open a Jupyter notebook, the server starts a kernel (a process) that runs the code. In this lesson, we’ll be using the IPython kernel, which lets us run Python 3 code interactively.

Other Jupyter kernels let us write and execute code in other programming languages, such as R, Java, Julia, Ruby, JavaScript, and Fortran, in the same JupyterLab interface.

A screenshot of the default Menu Bar is provided below.

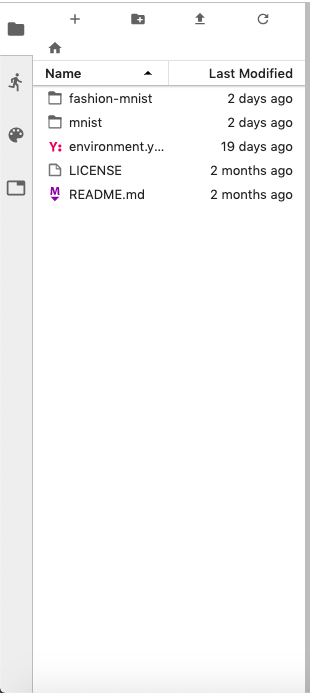

Left Sidebar

The left sidebar contains a number of commonly used tabs, such as a file browser (showing the contents of the directory where the JupyterLab server was launched). If you don’t see the data/ folder that you downloaded for the workshop, move it there now. You can hover your mouse over the folder path icon to see where to put the data/ folder.

The sidebar also includes a list of running kernels and terminals, the command palette, and a list of open tabs in the main work area. A screenshot of the default Left Side Bar is provided below.

The left sidebar can be collapsed or expanded by selecting “Show Left Sidebar” in the View menu or by clicking on the active sidebar tab.

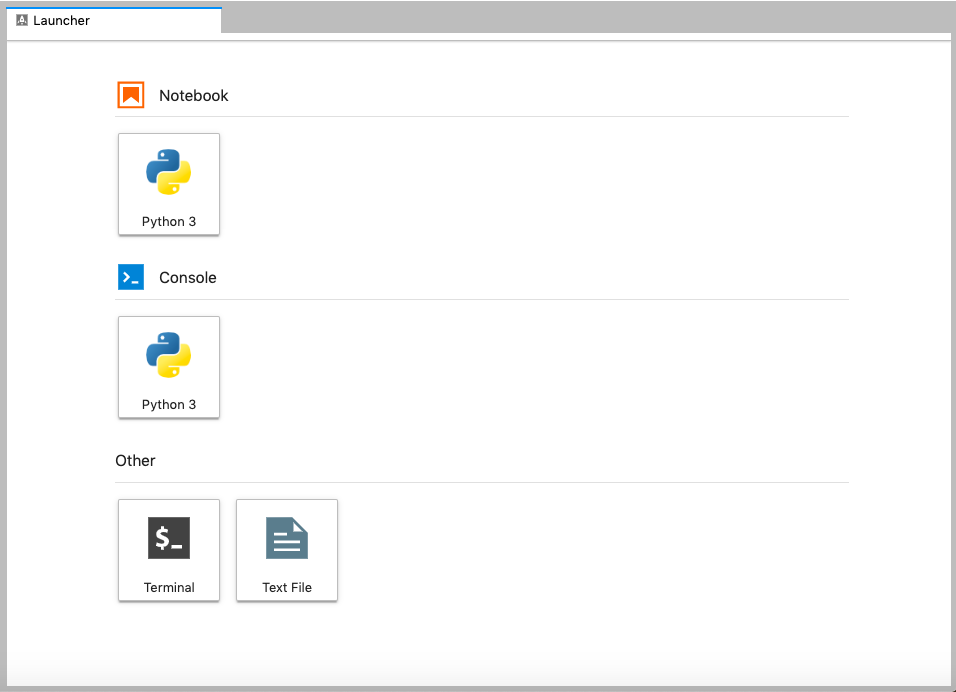

Main Work Area

The main work area in JupyterLab enables you to arrange documents (notebooks, text files, etc.) and other activities (terminals, code consoles, etc.) into panels of tabs that can be resized or subdivided. A screenshot of the default Main Work Area is provided below.

Drag a tab to the center of a tab panel to move the tab to the panel. Subdivide a tab panel by dragging a tab to the left, right, top, or bottom of the panel. The work area has a single current activity. The tab for the current activity is marked with a colored top border (blue by default).

Creating a Jupyter Notebook

To open a new notebook, click the Python 3 icon under the Notebook header in the Launcher tab in the main work area. You can also create a new notebook by selecting New -> Notebook from the File menu in the Menu Bar.

Additional notes on Jupyter notebooks:

- Notebook files have the extension .ipynb to distinguish them from plain-text Python programs.

- Notebooks can be exported as Python scripts that can be run from the command line.

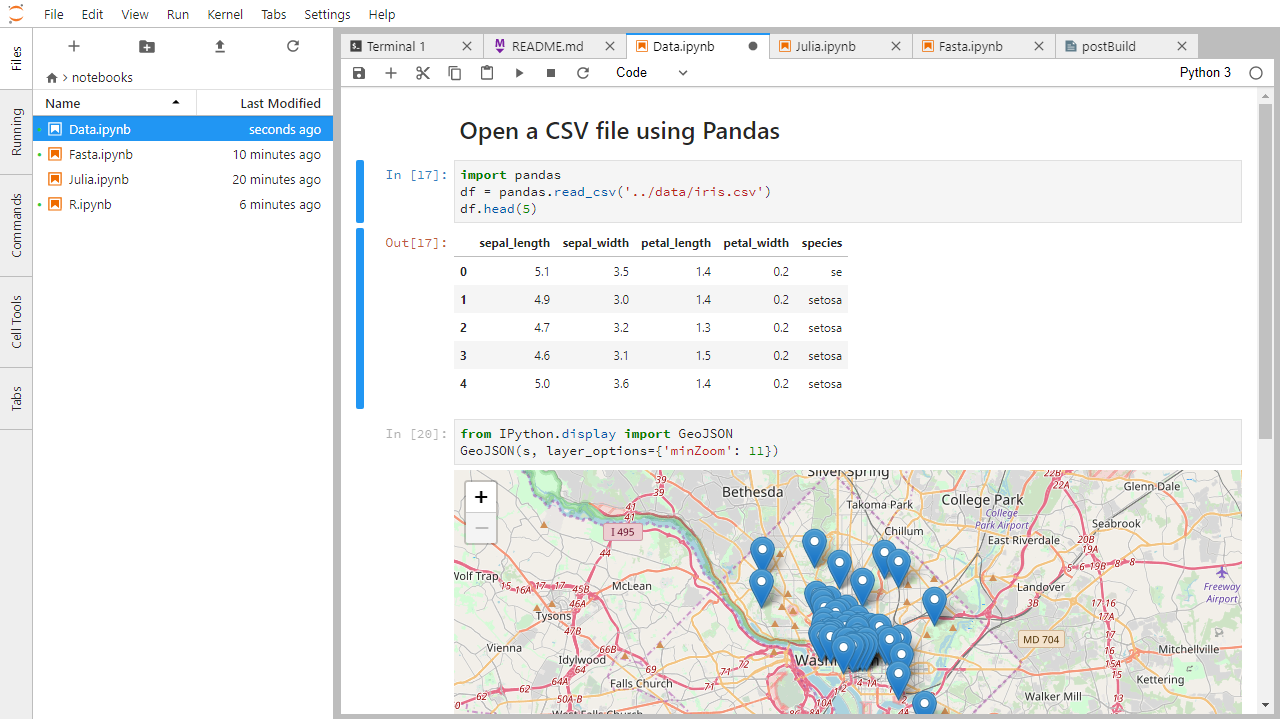

The screenshot above shows a Jupyter notebook running inside JupyterLab. If you are interested in more details, see the official notebook documentation.

How It’s Stored

- The notebook file is stored in a format called JSON.

- Just like a webpage, what’s saved looks different from what you see in your browser.

- But this format allows Jupyter to mix source code, text, and images, all in one file.
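To make “stored as JSON” concrete, here is a simplified sketch of a notebook’s structure, built and read back with Python’s standard json module. It assumes the nbformat version 4 layout (a top-level list of cells plus metadata and version numbers); real notebook files carry more metadata than this, so treat it as an illustration rather than a complete, valid file.

```python
import json

# A stripped-down notebook: one text (Markdown) cell and one code cell.
notebook = {
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# My analysis"]},
        {
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": ["7 * 3"],
        },
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 4,
}

# This JSON text is the kind of thing that gets written to an .ipynb file.
text = json.dumps(notebook, indent=1)

# Reading it back recovers the cells, each tagged with its type.
for cell in json.loads(text)["cells"]:
    print(cell["cell_type"], "->", "".join(cell["source"]))
```

Running it prints one line per cell, which is roughly what JupyterLab does when it turns the stored JSON back into the cells you see.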

Code vs. Text

Jupyter mixes code and text in different types of blocks, called cells. We often use the term “code” to mean “the source code of software written in a language such as Python”. A “code cell” in a Notebook is a cell that contains software; a “text cell” is one that contains ordinary prose written for human beings.

The Notebook has Command and Edit modes.

- If you press Esc and Return alternately, the outer border of your code cell will change from gray to blue.

- These are the Command (gray) and Edit (blue) modes of your notebook.

- Command mode allows you to edit notebook-level features, and Edit mode changes the content of cells.

- When in Command mode (esc/gray),

- The b key will make a new cell below the currently selected cell.

- The a key will make one above.

- The x key will delete the current cell.

- The z key will undo your last cell operation (which could be a deletion, creation, etc).

- All actions can be done using the menus, but there are lots of keyboard shortcuts to speed things up.

More Math

What is displayed when a Python cell in a notebook that contains several calculations is executed? For example, what happens when this cell is executed?

7 * 3

2 + 1

Solution

The notebook displays only the value of the last calculation in the cell:

3

Closing JupyterLab

- From the Menu Bar select the “File” menu and then choose “Shut Down” at the bottom of the dropdown menu. You will be prompted to confirm that you wish to shut down the JupyterLab server (don’t forget to save your work!). Click “Shut Down” to shut down the JupyterLab server.

- To restart the JupyterLab server you will need to re-run the following command from a shell.

$ jupyter lab

Closing JupyterLab

Practice closing and restarting the JupyterLab server.

Key Points

Use the Jupyter Notebook for editing and running Python.

The Notebook has Command and Edit modes.

Use the keyboard and mouse to select and edit cells.

Variables and Assignment

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How can I store data in programs?

Objectives

Write programs that assign scalar values to variables and perform calculations with those values.

Correctly trace value changes in programs that use scalar assignment.

Use variables to store values.

- Variables are names for values.

- In Python the = symbol assigns the value on the right to the name on the left.

- The variable is created when a value is assigned to it.

- Here, Python assigns an age to a variable age and a name in quotes to a variable first_name.

age = 42

first_name = 'Ahmed'

- Variable names

- can only contain letters, digits, and underscore _ (typically used to separate words in long variable names)

- cannot start with a digit

- are case sensitive (age, Age and AGE are three different variables)

- There are conventions for using upper-case letters at the start of variable names, so we will use lower-case letters for now.

- Variable names that start with underscores like __alistairs_real_age have a special meaning, so we won’t do that until we understand the convention.

Use meaningful variable names.

- Python doesn’t care what you call variables as long as they obey the rules (alphanumeric characters and the underscore).

flabadab = 42

- Use meaningful variable names to help other people understand what the program does.

- The most important “other person” is your future self.

Use comments to add documentation to programs.

- If you want to add information to explain what your code is doing, you can use the # character to indicate a comment.

# This sentence isn't executed by Python.

flabadab = 42    # current age - anything after '#' is ignored.

- Meaningful variable names reduce the need for explanatory comments, but when it is difficult to come up with a relatively short, relevant variable name, a comment can help.

Use print to display values.

- Python has a built-in function called print that prints things as text.

- Call the function (i.e., tell Python to run it) by using its name.

- Provide values to the function (i.e., the things to print) in parentheses.

- To add a string to the printout, wrap the string in single or double quotes.

- The values passed to the function are called arguments.

print(first_name, 'is', age, 'years old')

Ahmed is 42 years old

- print automatically puts a single space between items to separate them.

- It also ends the output with a new line.
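The space between items and the new line at the end come from two keyword arguments of print: sep (default ' ') and end (default '\n'). This sketch sends print’s output to an in-memory io.StringIO buffer through the file argument, so every character, including the trailing newline, can be inspected with repr.

```python
import io

first_name = 'Ahmed'
age = 42

# Capture what print writes instead of sending it to the screen.
buffer = io.StringIO()
print(first_name, 'is', age, 'years old', file=buffer)
default_output = buffer.getvalue()
print(repr(default_output))  # 'Ahmed is 42 years old\n'

# The defaults can be overridden with the sep and end keyword arguments.
buffer = io.StringIO()
print(first_name, age, sep=', ', end='!\n', file=buffer)
custom_output = buffer.getvalue()
print(repr(custom_output))   # 'Ahmed, 42!\n'
```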

Variables must be created before they are used.

- If a variable doesn’t exist yet, or if the name has been misspelled, Python reports an error. (Unlike some languages, which “guess” a default value.)

print(last_name)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-1-c1fbb4e96102> in <module>()

----> 1 print(last_name)

NameError: name 'last_name' is not defined

- The last line of an error message is usually the most informative.

Syntax errors are another common kind of error.

- Python reports a syntax error when it can’t understand the source of a program.

- Python won’t even try to run the program if it can’t be parsed.

# Forgetting an end quote causes a syntax error.

last_name = 'Feng

File "<ipython-input-56-f42768451d55>", line 2

last_name = 'Feng

^

SyntaxError: EOL while scanning string literal

- Look more closely at the error message to see what went wrong.
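Because Python parses the whole cell before running any of it, a syntax error anywhere stops everything, even lines that are themselves correct. The sketch below shows this with the built-in exec, which must parse its source before executing it; contextlib.redirect_stdout is used only to capture any output. The exact wording of the message depends on your Python version (Python 3.10 and later report “unterminated string literal” instead of the message shown above).

```python
import contextlib
import io

# The second line is missing its closing quote, like the example above.
source = "print('this line never runs')\nlast_name = 'Feng"

captured = io.StringIO()
error_line = None
try:
    with contextlib.redirect_stdout(captured):
        exec(source)  # exec parses the whole source before running any of it
except SyntaxError as error:
    error_line = error.lineno
    print('SyntaxError on line', error_line)

# The first line produced no output: parsing failed before anything ran.
print('Output from the program:', repr(captured.getvalue()))
```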

Variables Persist Between Cells

Be aware that it is the order of execution of cells that is important in a Jupyter notebook, not the order in which they appear. Python remembers all the code that was run previously, including any variables you have defined, irrespective of the order in the notebook. Therefore, if you define variables lower down the notebook and then (re)run cells further up, those defined further down will still be present. As an example, create two cells with the following content, in this order:

print(myval)

myval = 1

If you execute this in order, the first cell will give an error. However, if you run the first cell after the second cell, it will print out 1.
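One way to picture this is to think of the kernel as holding a single collection of names that every cell reads from and writes to. The sketch below is only an analogy, not how Jupyter is implemented: a plain dictionary plays the role of the kernel’s memory, the strings cell_1 and cell_2 stand in for notebook cells, and the built-in exec runs them against that shared dictionary.

```python
# A shared dictionary plays the role of the running kernel (an analogy only).
kernel_namespace = {}

cell_1 = "print(myval)"
cell_2 = "myval = 1"

# Running cell 1 first fails: myval does not exist yet.
try:
    exec(cell_1, kernel_namespace)
except NameError as error:
    print('cell 1 failed:', error)

# Run cell 2, then re-run cell 1: myval is remembered, so it prints 1.
exec(cell_2, kernel_namespace)
exec(cell_1, kernel_namespace)
```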

Variables can be used in calculations.

- We can use variables in calculations just as if they were values.

- Remember, we assigned the value 42 to age a few lines ago.

age = age + 3

print('Age in three years:', age)

Age in three years: 45

Predicting Values

What is the final value of position in the program below? (Try to predict the value without running the program, then check your prediction.)

initial = 'left'

position = initial

initial = 'right'

Solution

print(position)

left

The initial variable is assigned the value 'left'. In the second line, the position variable also receives the string value 'left'. In the third line, the initial variable is given the value 'right', but the position variable retains its string value of 'left'.

Variables only change value when something is assigned to them.

- If we make one cell in a spreadsheet depend on another, and update the latter, the former updates automatically.

- This does not happen in programming languages.

variable_one = 1

variable_two = 5 * variable_one

variable_one = 2

print('first is', variable_one, 'and second is', variable_two)

first is 2 and second is 5

- The computer reads the value of variable_one when doing the multiplication, creates a new value, and assigns it to variable_two.

- After that, variable_two does not remember where it came from.

Key Points

Use variables to store values.

Use print to display values.

Variables persist between cells.

Variables must be created before they are used.

Variables can be used in calculations.

Python is case-sensitive.

Use meaningful variable names.

Data Types and Type Conversion

Overview

Teaching: 10 min

Exercises: 10 min

Questions

What kinds of data do programs store?

How can I convert one type to another?

Objectives

Explain key differences between integers and floating point numbers.

Explain key differences between numbers and character strings.

Use built-in functions to convert between integers, floating point numbers, and strings.

Every value has a type.

- Every value in a program has a specific type.

- Integer (int): represents positive or negative whole numbers like 3 or -512.

- Floating point number (float): represents real numbers like 3.14159 or -2.5.

- Character string (usually called “string”, str): text.

- Written in either single quotes or double quotes (as long as they match).

- The quote marks aren’t printed when the string is displayed.

Use the built-in function type to find the type of a value.

- Use the built-in function type to find out what type a value has.

- Works on variables as well.

- But remember: the value has the type; the variable is just a label.

print(type(52))

<class 'int'>

fitness = 'average'

print(type(fitness))

<class 'str'>

Types control what operations (or methods) can be performed on a given value.

- A value’s type determines what the program can do to it.

print(5 - 3)

2

print('hello' - 'h')

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-2-67f5626a1e07> in <module>()

----> 1 print('hello' - 'h')

TypeError: unsupported operand type(s) for -: 'str' and 'str'

You can use the “+” operator on strings.

- “Adding” character strings concatenates them.

full_name = 'Ahmed' + ' ' + 'Walsh'

print(full_name)

Ahmed Walsh

Use the built-in function len to find the length of a string.

print(len('helium'))

6

- Nested function calls are evaluated from the inside out, like in mathematics: in print(len('helium')), len runs first and print then displays its result.
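To see the inside-out order step by step, the nested expression type(len('helium')) can be unrolled into separate assignments that run in the same order Python evaluates them. The variable names here are illustrative only.

```python
length = len('helium')        # innermost call runs first: 6
length_type = type(length)    # its result is then passed to type: <class 'int'>
print(length_type)            # finally print displays it

# The same steps written as one nested expression:
print(type(len('helium')))
```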

Strings have a length (but numbers don’t).

- We saw earlier that the built-in function len counts the number of characters in a string (‘helium’ has length 6).

- But numbers don’t have a length (not even zero).

print(len(52))

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-3-f769e8e8097d> in <module>()

----> 1 print(len(52))

TypeError: object of type 'int' has no len()

Must convert numbers to strings or vice versa when operating on them.

- Cannot add numbers and strings.

print(1 + '2')

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-4-fe4f54a023c6> in <module>()

----> 1 print(1 + '2')

TypeError: unsupported operand type(s) for +: 'int' and 'str'

- Not allowed because it’s ambiguous: should 1 + '2' be 3 or '12'?

- Some types can be converted to other types by using the type name as a function.

print(1 + int('2'))

print(str(1) + '2')

3

12
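The same type-name-as-function pattern also works with float, and in the other direction with str. A few more conversions are sketched below; note that a conversion only succeeds when the text really looks like a value of the target type, so int('3.5') raises a ValueError instead of guessing.

```python
whole = int('42') + 1        # string -> integer
scaled = float('3.5') * 2    # string -> float
label = str(42) + '!'        # integer -> string
truncated = int(3.99)        # float -> integer: the fractional part is dropped
print(whole, scaled, label, truncated)   # 43 7.0 42! 3

try:
    int('3.5')               # this text is not a whole number, so int() refuses it
except ValueError as error:
    print('ValueError:', error)
```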

Can mix integers and floats freely in operations.

- Integers and floating-point numbers can be mixed in arithmetic.

- Python 3 automatically converts integers to floats as needed.

- The result of the following calculation with an int and a float is a float.

print('three squared is', 3.0 ** 2)

three squared is 9.0
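Checking the type of each result makes the automatic conversion visible. In this short sketch, any operation that involves a float produces a float, and in Python 3 the / operator produces a float even when both operands are integers.

```python
squared = 3.0 ** 2      # float combined with int
print(squared, type(squared))    # 9.0 <class 'float'>

total = 1 + 2.5         # the int 1 is treated as 1.0
print(total, type(total))        # 3.5 <class 'float'>

half = 7 / 2            # / between two ints still gives a float
print(half, type(half))          # 3.5 <class 'float'>
```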

Choose a Type

What type of value (integer, floating point number, or character string) would you use to represent each of the following? Try to come up with more than one good answer for each problem. For example, for the first item, when would counting days with a floating point variable make more sense than using an integer?

- Number of days since the start of the year.

- Time elapsed from the start of the year until now in days.

- Serial number of a piece of lab equipment.

- A lab specimen’s age

- Current population of a city.

- Average population of a city over time.

Solution

The answers to the questions are:

- Integer, since the number of days would lie between 1 and 366.

- Floating point, since fractional days are required.

- Character string if the serial number contains letters and numbers, otherwise integer if the serial number consists only of numerals.

- This will vary! How do you define a specimen’s age? Whole days since collection (integer)? Date and time (string)?

- Choose floating point to represent population as large aggregates (e.g., millions), or integer to represent population in units of individuals.

- Floating point number, since an average is likely to have a fractional part.

Key Points

Every value has a type.

Use the built-in function type to find the type of a value.

Types control what operations can be done on values.

Strings can be added.

Strings have a length (but numbers don’t).

Must convert numbers to strings or vice versa when operating on them.

Can mix integers and floats freely in operations.

Variables only change value when something is assigned to them.

Built-in Functions and Help

Overview

Teaching: 15 min

Exercises: 10 min

Questions

How can I find out what built-in functions do?

Objectives

Use help to display documentation for built-in functions.

A function may take zero or more arguments.

- We have seen some functions already (print, len, type, int, str); now let’s take a closer look.

- An argument is a value passed into a function.

- len takes exactly one.

- int, str, and float create a new value from an existing one.

- print takes zero or more.

- print with no arguments prints a blank line.

Commonly-used built-in function round and its arguments.

- round will round off a floating-point or integer number.

- Functions may have default values for some arguments.

- By default, round rounds to zero decimal places.

round(3.712)

4

- We can specify the number of decimal places we want.

round(3.712, 1)

3.7

Argument types matter.

round(3.712, 1.5)

TypeError Traceback (most recent call last)

<ipython-input-43-95f04cdcdc64> in <module>

----> 1 round(3.712, 1.5)

TypeError: 'float' object cannot be interpreted as an integer

Use the built-in function help to get help for a function.

- Every built-in function has online documentation.

help(round)

Help on built-in function round in module builtins:

round(number, ndigits=None)

Round a number to a given precision in decimal digits.

The return value is an integer if ndigits is omitted or None. Otherwise

the return value has the same type as the number. ndigits may be negative.
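The help text says the return type depends on whether ndigits is given and that ndigits may be negative. This short check makes those rules concrete. It also shows one behaviour documented for round in the Python docs but not in this help text: a value exactly halfway between two candidates is rounded to the even one.

```python
no_digits = round(3.712)        # ndigits omitted -> an int
one_digit = round(3.712, 1)     # ndigits given -> same type as the number (float here)
hundreds = round(1234, -2)      # negative ndigits rounds to tens, hundreds, ...
print(no_digits, type(no_digits))   # 4 <class 'int'>
print(one_digit, type(one_digit))   # 3.7 <class 'float'>
print(hundreds)                     # 1200

# Exact ties go to the even neighbour ("banker's rounding").
print(round(2.5), round(3.5))       # 2 4
```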

The Jupyter Notebook has two ways to get help.

- Option 1: Place the cursor near where the function is invoked in a cell (i.e., the function name or its parameters),

- Hold down Shift, and press Tab.

- Do this several times to expand the information returned.

- Option 2: Type the function name in a cell with a question mark after it. Then run the cell.

Explore the Python docs!

The official Python documentation is arguably the most complete source of information about the language. It is available in different languages and contains a lot of useful resources. The Built-in Functions page contains a catalogue of all of these functions, including the ones that we’ve covered in this lesson. Some of these are more advanced and unnecessary at the moment, but others are very simple and useful.

Key Points

Functions may only work for certain (combinations of) arguments.

Functions may have default values for some arguments.

Use the built-in function help to get help for a function.

The Jupyter Notebook has two ways to get help.

Morning Coffee

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Objectives

Reflection exercise

Over coffee, reflect on and discuss the following:

- What are the different kinds of errors Python will report?

- Did the code always produce the results you expected? If not, why?

- Is there something we can do to prevent errors when we write code?

Key Points

Libraries

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How can I use software that other people have written?

How can I find out what that software does?

Objectives

Explain what software libraries are and why programmers create and use them.

Write programs that import and use modules from Python’s standard library.

Find and read documentation for the standard library interactively (in the interpreter) and online.

Most of the power of a programming language is in its libraries.

- A library is a collection of files (called modules) that contains functions for use by other programs.

- May also contain data values (e.g., numerical constants) and other things.

- A library’s contents are supposed to be related, but there’s no way to enforce that.

- The Python standard library is an extensive suite of modules that comes with Python itself.

- Many additional libraries are available from PyPI (the Python Package Index).

- We will see later how to write new libraries.

A program must import a library module before using it.

- Use import to load a library module into a program’s memory.

- Then refer to things from the module as module_name.thing_name.

- Python uses . to mean “part of”.

- Using math, one of the modules in the standard library:

import math

print('pi is', math.pi)

print('cos(pi) is', math.cos(math.pi))

pi is 3.141592653589793

cos(pi) is -1.0

- Have to refer to each item with the module’s name.

- math.cos(pi) won’t work, because pi on its own is not defined; write math.pi instead.
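A quick way to see why the prefix is needed: pi belongs to the math module, but importing math does not create a bare name pi in your program. This sketch catches the resulting NameError so that the rest of the cell keeps running.

```python
import math

print(math.cos(math.pi))   # works: both names are looked up inside math, giving -1.0

try:
    print(math.cos(pi))    # fails: pi on its own was never defined
except NameError as error:
    print('NameError:', error)
```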

Use help to learn about the contents of a library module.

- Works just like help for a function.

- Must first import the library.

help(math)

Help on module math:

NAME

math

MODULE REFERENCE

http://docs.python.org/3/library/math

The following documentation is automatically generated from the Python

source files. It may be incomplete, incorrect or include features that

are considered implementation detail and may vary between Python

implementations. When in doubt, consult the module reference at the

location listed above.

DESCRIPTION

This module is always available. It provides access to the

mathematical functions defined by the C standard.

FUNCTIONS

acos(x, /)

Return the arc cosine (measured in radians) of x.

⋮ ⋮ ⋮

Key Points

Most of the power of a programming language is in its libraries.

A program must import a library module in order to use it.

Use help to learn about the contents of a library module.

Reading Tabular Data into DataFrames

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How can I read tabular data?

Objectives

Import the Pandas library.

Use Pandas to load a simple CSV data set.

Get some basic information about a Pandas DataFrame.

Use the Pandas library to do statistics on tabular data.

- Pandas is a widely-used Python library for statistics, particularly on tabular data.

- Not in the standard library, but is already installed with the Anaconda version of Python.

- Borrows many features from R’s dataframes.

- A 2-dimensional table whose columns have names and potentially have different data types.

Create an alias for a library module when importing it to shorten programs.

- Use import ... as ... to give a library a short alias while importing it.

- Load pandas with import pandas as pd. The alias pd is commonly used for Pandas.

import pandas as pd

- Then refer to items in the library using that shortened name.

- Commonly used for libraries that are frequently used or have long names.

- E.g., the matplotlib plotting library is often aliased as mpl.

- But can make programs harder to understand, since readers must learn your program’s aliases.
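The alias mechanism works the same way for any module. Since pandas is not part of the standard library, this sketch uses the standard math module with the alias m, which is chosen here purely for illustration and is not a common convention.

```python
import math as m   # 'm' is an illustrative alias, not a convention

print(m.pi)        # the same value you would get from math.pi
print(m.sqrt(16))  # 4.0
```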

Read a Comma Separated Values (CSV) data file with pd.read_csv.

- Argument is the name of the file to be read.

- Assign result to a variable to store the data that was read.

data = pd.read_csv('data/gapminder_gdp_oceania.csv')

print(data)

country gdpPercap_1952 gdpPercap_1957 gdpPercap_1962 \

0 Australia 10039.59564 10949.64959 12217.22686

1 New Zealand 10556.57566 12247.39532 13175.67800

gdpPercap_1967 gdpPercap_1972 gdpPercap_1977 gdpPercap_1982 \

0 14526.12465 16788.62948 18334.19751 19477.00928

1 14463.91893 16046.03728 16233.71770 17632.41040

gdpPercap_1987 gdpPercap_1992 gdpPercap_1997 gdpPercap_2002 \

0 21888.88903 23424.76683 26997.93657 30687.75473

1 19007.19129 18363.32494 21050.41377 23189.80135

gdpPercap_2007

0 34435.36744

1 25185.00911

- The columns in a dataframe are the observed variables, and the rows are the observations.

- Pandas uses backslash \ to show wrapped lines when output is too wide to fit the screen.

File Not Found

Our lessons store their data files in a data sub-directory, which is why the path to the file is data/gapminder_gdp_oceania.csv. If you forget to include data/, or if you include it but your copy of the file is somewhere else, you will get a runtime error that ends with a line like this:

FileNotFoundError: [Errno 2] No such file or directory: 'data/gapminder_gdp_oceania.csv'
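A relative path such as data/gapminder_gdp_oceania.csv is looked up starting from the current working directory, which in Jupyter is normally the folder that contains the notebook. When you hit this error, a quick diagnostic is to ask Python where it is looking. This small sketch uses only the standard pathlib module.

```python
from pathlib import Path

csv_path = Path('data') / 'gapminder_gdp_oceania.csv'

print('Current working directory:', Path.cwd())
print('Looking for:', csv_path.resolve())   # the full path Python will try
print('Found it?', csv_path.exists())
```

If the last line prints False, either move the data folder next to your notebook or start JupyterLab from the folder that contains data/.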

Use index_col to specify that a column’s values should be used as row headings.

- Row headings are numbers (0 and 1 in this case).

- Really want to index by country.

- Pass the name of the column to read_csv as its index_col parameter to do this.

data = pd.read_csv('data/gapminder_gdp_oceania.csv', index_col='country')

print(data)

gdpPercap_1952 gdpPercap_1957 gdpPercap_1962 gdpPercap_1967 \

country

Australia 10039.59564 10949.64959 12217.22686 14526.12465

New Zealand 10556.57566 12247.39532 13175.67800 14463.91893

gdpPercap_1972 gdpPercap_1977 gdpPercap_1982 gdpPercap_1987 \

country

Australia 16788.62948 18334.19751 19477.00928 21888.88903

New Zealand 16046.03728 16233.71770 17632.41040 19007.19129

gdpPercap_1992 gdpPercap_1997 gdpPercap_2002 gdpPercap_2007

country

Australia 23424.76683 26997.93657 30687.75473 34435.36744

New Zealand 18363.32494 21050.41377 23189.80135 25185.00911
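To experiment with index_col without the data files, you can pass read_csv a file-like object instead of a file name. This sketch wraps a small CSV string in io.StringIO; the values are copied from the Oceania output above, keeping only two of the twelve year columns for brevity.

```python
import io

import pandas as pd

csv_text = """country,gdpPercap_1952,gdpPercap_1957
Australia,10039.59564,10949.64959
New Zealand,10556.57566,12247.39532
"""

# Without index_col: rows are labelled 0 and 1, and country is an ordinary column.
plain = pd.read_csv(io.StringIO(csv_text))
print(plain.index.tolist())         # [0, 1]

# With index_col='country': the country names become the row labels.
by_country = pd.read_csv(io.StringIO(csv_text), index_col='country')
print(by_country.index.tolist())    # ['Australia', 'New Zealand']
print(by_country.columns.tolist())  # country is no longer a data column
```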

Use the DataFrame.info() method to find out more about a dataframe.

data.info()

<class 'pandas.core.frame.DataFrame'>

Index: 2 entries, Australia to New Zealand

Data columns (total 12 columns):

gdpPercap_1952 2 non-null float64

gdpPercap_1957 2 non-null float64

gdpPercap_1962 2 non-null float64

gdpPercap_1967 2 non-null float64

gdpPercap_1972 2 non-null float64

gdpPercap_1977 2 non-null float64

gdpPercap_1982 2 non-null float64

gdpPercap_1987 2 non-null float64

gdpPercap_1992 2 non-null float64

gdpPercap_1997 2 non-null float64

gdpPercap_2002 2 non-null float64

gdpPercap_2007 2 non-null float64

dtypes: float64(12)

memory usage: 208.0+ bytes

- This is a DataFrame.

- Two rows named 'Australia' and 'New Zealand'.

- Twelve columns, each of which has two actual 64-bit floating point values.

- We will talk later about null values, which are used to represent missing observations.

- Uses 208 bytes of memory.

Functions attached to objects are called methods

- We created the object named data, and because it is a DataFrame type of object, it has a method (or function) that gives us information about the dataframe.

- Methods have parentheses like functions, but come after the variable and a dot, as in data.info().

The DataFrame.columns variable stores information about the dataframe’s columns.

- Note that this is data, not a method. (It doesn’t have parentheses.)

- Like math.pi.

- So do not use () to try to call it.

- Called a member variable, or just member.

print(data.columns)

Index(['gdpPercap_1952', 'gdpPercap_1957', 'gdpPercap_1962', 'gdpPercap_1967',

'gdpPercap_1972', 'gdpPercap_1977', 'gdpPercap_1982', 'gdpPercap_1987',

'gdpPercap_1992', 'gdpPercap_1997', 'gdpPercap_2002', 'gdpPercap_2007'],

dtype='object')

Use DataFrame.T to transpose a dataframe.

- Sometimes we want to treat columns as rows and vice versa.

- Transpose (written .T) returns the dataframe with its rows and columns swapped; the original dataframe is left unchanged.

- Like columns, it is a member variable.

print(data.T)

country Australia New Zealand

gdpPercap_1952 10039.59564 10556.57566

gdpPercap_1957 10949.64959 12247.39532

gdpPercap_1962 12217.22686 13175.67800

gdpPercap_1967 14526.12465 14463.91893

gdpPercap_1972 16788.62948 16046.03728

gdpPercap_1977 18334.19751 16233.71770

gdpPercap_1982 19477.00928 17632.41040

gdpPercap_1987 21888.88903 19007.19129

gdpPercap_1992 23424.76683 18363.32494

gdpPercap_1997 26997.93657 21050.41377

gdpPercap_2002 30687.75473 23189.80135

gdpPercap_2007 34435.36744 25185.00911
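Transposing swaps the row labels and the column labels. This small check uses three of the Oceania columns (values copied from the output above), read through io.StringIO so that it runs without the data files; comparing shapes before and after shows the swap and that data itself is not modified.

```python
import io

import pandas as pd

# Three of the Oceania columns, copied from the output above.
csv_text = """country,gdpPercap_1952,gdpPercap_1957,gdpPercap_1962
Australia,10039.59564,10949.64959,12217.22686
New Zealand,10556.57566,12247.39532,13175.67800
"""
data = pd.read_csv(io.StringIO(csv_text), index_col='country')

flipped = data.T
print(data.shape)                 # (2, 3): 2 countries, 3 years
print(flipped.shape)              # (3, 2): 3 years, 2 countries
print(flipped.columns.tolist())   # ['Australia', 'New Zealand']
print(data.shape)                 # the original is unchanged: still (2, 3)
```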

Use DataFrame.describe() to get summary statistics about data.

DataFrame.describe() gets the summary statistics of only the columns that have numerical data.

All other columns are ignored, unless you use the argument include='all'.

print(data.describe())

gdpPercap_1952 gdpPercap_1957 gdpPercap_1962 gdpPercap_1967 \

count 2.000000 2.000000 2.000000 2.000000

mean 10298.085650 11598.522455 12696.452430 14495.021790

std 365.560078 917.644806 677.727301 43.986086

min 10039.595640 10949.649590 12217.226860 14463.918930

25% 10168.840645 11274.086022 12456.839645 14479.470360

50% 10298.085650 11598.522455 12696.452430 14495.021790

75% 10427.330655 11922.958888 12936.065215 14510.573220

max 10556.575660 12247.395320 13175.678000 14526.124650

gdpPercap_1972 gdpPercap_1977 gdpPercap_1982 gdpPercap_1987 \

count 2.00000 2.000000 2.000000 2.000000

mean 16417.33338 17283.957605 18554.709840 20448.040160

std 525.09198 1485.263517 1304.328377 2037.668013

min 16046.03728 16233.717700 17632.410400 19007.191290

25% 16231.68533 16758.837652 18093.560120 19727.615725

50% 16417.33338 17283.957605 18554.709840 20448.040160

75% 16602.98143 17809.077557 19015.859560 21168.464595

max 16788.62948 18334.197510 19477.009280 21888.889030

gdpPercap_1992 gdpPercap_1997 gdpPercap_2002 gdpPercap_2007

count 2.000000 2.000000 2.000000 2.000000

mean 20894.045885 24024.175170 26938.778040 29810.188275

std 3578.979883 4205.533703 5301.853680 6540.991104

min 18363.324940 21050.413770 23189.801350 25185.009110

25% 19628.685413 22537.294470 25064.289695 27497.598692

50% 20894.045885 24024.175170 26938.778040 29810.188275

75% 22159.406358 25511.055870 28813.266385 32122.777857

max 23424.766830 26997.936570 30687.754730 34435.367440

- Not particularly useful with just two records, but very helpful when there are thousands.
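The Americas data used below has a text column named continent, and describe() skips it by default. The sketch below compares the default with include='all'. It uses three rows whose values are copied from this episode's outputs; it is not the full file.

```python
import pandas as pd

# A small frame shaped like the Americas data: one text column plus one numeric column
americas_small = pd.DataFrame(
    {
        "continent": ["Americas", "Americas", "Americas"],
        "gdpPercap_2007": [12779.379640, 3822.137084, 9065.800825],
    },
    index=pd.Index(["Argentina", "Bolivia", "Brazil"], name="country"),
)

numeric_only = americas_small.describe()             # the text column is skipped
everything = americas_small.describe(include="all")  # adds count/unique/top/freq for text

print(numeric_only.columns.tolist())  # ['gdpPercap_2007']
print(everything.loc[["unique", "top", "freq"], "continent"])
```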

Reading Other Data

Read the data in gapminder_gdp_americas.csv (which should be in the same directory as gapminder_gdp_oceania.csv) into a variable called americas and display its summary statistics.

Solution

To read in a CSV, we use pd.read_csv and pass the filename 'data/gapminder_gdp_americas.csv' to it. We also once again pass the column name 'country' to the parameter index_col in order to index by country. The summary statistics can be displayed with the DataFrame.describe() method.

americas = pd.read_csv('data/gapminder_gdp_americas.csv', index_col='country')
americas.describe()

Inspecting Data

After reading the data for the Americas, use help(americas.head) and help(americas.tail) to find out what DataFrame.head and DataFrame.tail do.

- What method call will display the first three rows of this data?

- What method call will display the last three columns of this data? (Hint: you may need to change your view of the data.)

Solution

We can check out the first five rows of americas by executing americas.head() (allowing us to view the head of the DataFrame). We can specify the number of rows we wish to see by specifying the parameter n in our call to americas.head(). To view the first three rows, execute:

americas.head(n=3)

          continent  gdpPercap_1952  gdpPercap_1957  gdpPercap_1962  \
country
Argentina  Americas     5911.315053     6856.856212     7133.166023
Bolivia    Americas     2677.326347     2127.686326     2180.972546
Brazil     Americas     2108.944355     2487.365989     3336.585802

           gdpPercap_1967  gdpPercap_1972  gdpPercap_1977  gdpPercap_1982  \
country
Argentina     8052.953021     9443.038526    10079.026740     8997.897412
Bolivia       2586.886053     2980.331339     3548.097832     3156.510452
Brazil        3429.864357     4985.711467     6660.118654     7030.835878

           gdpPercap_1987  gdpPercap_1992  gdpPercap_1997  gdpPercap_2002  \
country
Argentina     9139.671389     9308.418710    10967.281950     8797.640716
Bolivia       2753.691490     2961.699694     3326.143191     3413.262690
Brazil        7807.095818     6950.283021     7957.980824     8131.212843

           gdpPercap_2007
country
Argentina    12779.379640
Bolivia       3822.137084
Brazil        9065.800825

To check out the last three rows of americas, we would use the command americas.tail(n=3), analogous to head() used above. However, here we want to look at the last three columns, so we need to change our view and then use tail(). To do so, we create a new DataFrame in which rows and columns are switched:

americas_flipped = americas.T

We can then view the last three columns of americas by viewing the last three rows of americas_flipped:

americas_flipped.tail(n=3)

country        Argentina  Bolivia   Brazil   Canada    Chile Colombia  \
gdpPercap_1997   10967.3  3326.14  7957.98  28954.9  10118.1  6117.36
gdpPercap_2002   8797.64  3413.26  8131.21    33329  10778.8  5755.26
gdpPercap_2007   12779.4  3822.14   9065.8  36319.2  13171.6  7006.58

country        Costa Rica     Cuba Dominican Republic  Ecuador  ...  \
gdpPercap_1997    6677.05  5431.99             3614.1  7429.46  ...
gdpPercap_2002    7723.45  6340.65            4563.81  5773.04  ...
gdpPercap_2007    9645.06   8948.1            6025.37  6873.26  ...

country          Mexico Nicaragua   Panama Paraguay     Peru Puerto Rico  \
gdpPercap_1997   9767.3   2253.02  7113.69   4247.4  5838.35     16999.4
gdpPercap_2002  10742.4   2474.55  7356.03  3783.67  5909.02     18855.6
gdpPercap_2007  11977.6   2749.32  9809.19  4172.84  7408.91     19328.7

country        Trinidad and Tobago United States  Uruguay Venezuela
gdpPercap_1997             8792.57       35767.4  9230.24   10165.5
gdpPercap_2002             11460.6       39097.1     7727   8605.05
gdpPercap_2007             18008.5       42951.7  10611.5   11415.8

This shows the data that we want, but we may prefer to display three columns instead of three rows, so we can flip it back:

americas_flipped.tail(n=3).T

Note: we could have done the above in a single line of code by ‘chaining’ the commands:

americas.T.tail(n=3).T
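Here is a self-contained sketch of the same head/tail/transpose idea, using a made-up 4 x 5 frame with placeholder numbers and hypothetical row labels A to D. It also checks the chained version against df.iloc[:, -3:], which is another way to select the last three columns by position.

```python
import pandas as pd

# Made-up frame: hypothetical row labels and placeholder values, not Gapminder data
years = ["gdpPercap_1952", "gdpPercap_1957", "gdpPercap_1962", "gdpPercap_1967", "gdpPercap_1972"]
df = pd.DataFrame(
    [[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15], [16, 17, 18, 19, 20]],
    index=["A", "B", "C", "D"],
    columns=years,
)

first_three_rows = df.head(n=3)        # rows A, B, C
last_three_cols = df.T.tail(n=3).T     # flip, take the last three rows, flip back

print(first_three_rows.index.tolist())  # ['A', 'B', 'C']
print(last_three_cols.columns.tolist()) # ['gdpPercap_1962', 'gdpPercap_1967', 'gdpPercap_1972']
```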

Writing Data

As well as the read_csv function for reading data from a file, Pandas provides a to_csv function to write dataframes to files. Applying what you’ve learned about reading from files, write one of your dataframes to a file called processed.csv. You can use help to get information on how to use to_csv.

Solution

In order to write the DataFrame americas to a file called processed.csv, execute the following command:

americas.to_csv('processed.csv')

For help on to_csv, you could execute, for example:

help(americas.to_csv)

Note that help(to_csv) throws an error (a NameError)! This is a subtlety: to_csv is NOT a function in and of itself but a method of DataFrame objects, so the actual call is americas.to_csv.
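A short sketch of writing a dataframe and reading it back shows that to_csv and read_csv with index_col fit together. To keep the example self-contained, it writes to an in-memory io.StringIO buffer instead of processed.csv. The two-row frame is made up, with values copied from the output above.

```python
import io
import pandas as pd

# Made-up frame indexed by country, like the Gapminder files
df = pd.DataFrame(
    {"gdpPercap_2007": [12779.379640, 3822.137084]},
    index=pd.Index(["Argentina", "Bolivia"], name="country"),
)

buffer = io.StringIO()    # an in-memory "file"; a filename such as 'processed.csv' works the same way
df.to_csv(buffer)         # the index (country) is written as the first column by default
buffer.seek(0)            # rewind so we can read what was just written

round_trip = pd.read_csv(buffer, index_col="country")
print(round_trip.equals(df))  # True
```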

Key Points

Use the Pandas library to get basic statistics out of tabular data.

Use index_col to specify that a column’s values should be used as row headings.

Use DataFrame.info to find out more about a dataframe.

The DataFrame.columns variable stores information about the dataframe’s columns.

Use DataFrame.T to transpose a dataframe.

Use DataFrame.describe to get summary statistics about data.

Pandas DataFrames

Overview

Teaching: 15 min

Exercises: 15 min

Questions

How can I do statistical analysis of tabular data?

Objectives

Select individual values from a Pandas dataframe.

Select entire rows or entire columns from a dataframe.

Select a subset of both rows and columns from a dataframe in a single operation.

Select a subset of a dataframe by a single Boolean criterion.

Note about Pandas DataFrames/Series

A DataFrame is a collection of Series; the DataFrame is the way Pandas represents a table, and a Series is the data structure Pandas uses to represent a column.

Pandas is built on top of the NumPy library, which in practice means that most of the methods defined for NumPy arrays apply to Pandas Series/DataFrames.

What makes Pandas so attractive is the powerful interface to access individual records of the table, proper handling of missing values, and relational-database operations between DataFrames.
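A minimal sketch of the DataFrame/Series relationship follows. It uses three values copied from the Europe output later in this episode. Selecting one column gives a Series, and the same reduction (max) works on the Series and on the NumPy array underneath it.

```python
import pandas as pd

# GDP per capita in 1952 for three countries, copied from the Europe output in this episode
data = pd.DataFrame(
    {"gdpPercap_1952": [1601.056136, 6137.076492, 8343.105127]},
    index=pd.Index(["Albania", "Austria", "Belgium"], name="country"),
)

column = data["gdpPercap_1952"]      # one column of a DataFrame is a Series
print(type(data).__name__, type(column).__name__)  # DataFrame Series

values = column.to_numpy()           # the NumPy array underneath the Series
print(type(values).__name__)         # ndarray
print(column.max(), values.max())    # the same reduction works on both
```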

Selecting values

To access a value at the position [i,j] of a DataFrame, we have two options, depending on what we mean by i: the row’s position or the row’s label.

Remember that a DataFrame provides an index as a way to identify the rows of the table; a row, then, has a position inside the table as well as a label, which uniquely identifies its entry in the DataFrame.

Use DataFrame.iloc[..., ...] to select values by their (entry) position

- Can specify location by numerical index, analogously to a 2D version of character selection in strings.

import pandas as pd

data = pd.read_csv('data/gapminder_gdp_europe.csv', index_col='country')

print(data.iloc[0, 0])

1601.056136

Use DataFrame.loc[..., ...] to select values by their (entry) label.

- Can specify location by row name, analogously to a 2D version of dictionary keys.

print(data.loc["Albania", "gdpPercap_1952"])

1601.056136
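The difference between iloc and loc is easiest to see when the row labels are integers that do not match the row positions. The sketch below uses a made-up frame with hypothetical labels 10, 20 and 30.

```python
import pandas as pd

# Hypothetical frame whose row labels (10, 20, 30) differ from their positions (0, 1, 2)
df = pd.DataFrame({"pressure": [0.273, 0.275, 0.277]}, index=[10, 20, 30])

by_position = df.iloc[1, 0]        # second row, first column
by_label = df.loc[20, "pressure"]  # row labelled 20, column labelled "pressure"
print(by_position, by_label)       # 0.275 0.275

raised = False
try:
    df.loc[1, "pressure"]          # there is no row *labelled* 1
except KeyError:
    raised = True
print("KeyError raised:", raised)  # KeyError raised: True
```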

Use : on its own to mean all columns or all rows.

- Just like Python’s usual slicing notation.

print(data.loc["Albania", :])

gdpPercap_1952 1601.056136

gdpPercap_1957 1942.284244

gdpPercap_1962 2312.888958

gdpPercap_1967 2760.196931

gdpPercap_1972 3313.422188

gdpPercap_1977 3533.003910

gdpPercap_1982 3630.880722

gdpPercap_1987 3738.932735

gdpPercap_1992 2497.437901

gdpPercap_1997 3193.054604

gdpPercap_2002 4604.211737

gdpPercap_2007 5937.029526

Name: Albania, dtype: float64

- Would get the same result printing data.loc["Albania"] (without a second index).

print(data.loc[:, "gdpPercap_1952"])

country

Albania 1601.056136

Austria 6137.076492

Belgium 8343.105127

⋮ ⋮ ⋮

Switzerland 14734.232750

Turkey 1969.100980

United Kingdom 9979.508487

Name: gdpPercap_1952, dtype: float64

- Would get the same result printing data["gdpPercap_1952"]

- Also get the same result printing data.gdpPercap_1952 (not recommended, because easily confused with . notation for methods)

Select multiple columns or rows using DataFrame.loc and a named slice.

print(data.loc['Italy':'Poland', 'gdpPercap_1962':'gdpPercap_1972'])

gdpPercap_1962 gdpPercap_1967 gdpPercap_1972

country

Italy 8243.582340 10022.401310 12269.273780

Montenegro 4649.593785 5907.850937 7778.414017

Netherlands 12790.849560 15363.251360 18794.745670

Norway 13450.401510 16361.876470 18965.055510

Poland 5338.752143 6557.152776 8006.506993

In the above code, we discover that slicing using loc is inclusive at both ends, which differs from slicing using iloc, where slicing indicates everything up to but not including the final index.
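The inclusive/exclusive difference can be checked by comparing the shapes of two slices of the same frame. The sketch uses placeholder values with the country and year labels from the example above.

```python
import pandas as pd

# Placeholder values (not real GDP), with the same labels as the example above
df = pd.DataFrame(
    {"gdpPercap_1962": [1, 2, 3, 4], "gdpPercap_1967": [5, 6, 7, 8], "gdpPercap_1972": [9, 10, 11, 12]},
    index=["Italy", "Montenegro", "Netherlands", "Norway"],
)

by_label = df.loc["Italy":"Netherlands", "gdpPercap_1962":"gdpPercap_1967"]
by_position = df.iloc[0:2, 0:1]

print(by_label.shape)     # (3, 2): both end labels are included
print(by_position.shape)  # (2, 1): the end positions are excluded
```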

Result of slicing can be used in further operations.

- Usually don’t just print a slice.

- All the statistical operators that work on entire dataframes work the same way on slices.

- E.g., calculate max of a slice.

print(data.loc['Italy':'Poland', 'gdpPercap_1962':'gdpPercap_1972'].max())

gdpPercap_1962 13450.40151

gdpPercap_1967 16361.87647

gdpPercap_1972 18965.05551

dtype: float64

print(data.loc['Italy':'Poland', 'gdpPercap_1962':'gdpPercap_1972'].min())

gdpPercap_1962 4649.593785

gdpPercap_1967 5907.850937

gdpPercap_1972 7778.414017

dtype: float64

Use comparisons to select data based on value.

- Comparison is applied element by element.

- Returns a similarly-shaped dataframe of True and False.

# Use a subset of data to keep output readable.

subset = data.loc['Italy':'Poland', 'gdpPercap_1962':'gdpPercap_1972']

print('Subset of data:\n', subset)

# Which values were greater than 10000 ?

print('\nWhere are values large?\n', subset > 10000)

Subset of data:

gdpPercap_1962 gdpPercap_1967 gdpPercap_1972

country

Italy 8243.582340 10022.401310 12269.273780

Montenegro 4649.593785 5907.850937 7778.414017

Netherlands 12790.849560 15363.251360 18794.745670

Norway 13450.401510 16361.876470 18965.055510

Poland 5338.752143 6557.152776 8006.506993

Where are values large?

gdpPercap_1962 gdpPercap_1967 gdpPercap_1972

country

Italy False True True

Montenegro False False False

Netherlands True True True

Norway True True True

Poland False False False

Select values or NaN using a Boolean mask.

- A frame full of Booleans is sometimes called a mask because of how it can be used.

- We can see the gdp values we have determined to be large:

mask = subset > 10000

print(subset[mask])

gdpPercap_1962 gdpPercap_1967 gdpPercap_1972

country

Italy NaN 10022.40131 12269.27378

Montenegro NaN NaN NaN

Netherlands 12790.84956 15363.25136 18794.74567

Norway 13450.40151 16361.87647 18965.05551

Poland NaN NaN NaN

- Get the value where the mask is true, and NaN (Not a Number) where it is false.

- Useful because NaNs are ignored by operations like max, min, average, etc.

print(subset[mask].describe())

gdpPercap_1962 gdpPercap_1967 gdpPercap_1972

count 2.000000 3.000000 3.000000

mean 13120.625535 13915.843047 16676.358320

std 466.373656 3408.589070 3817.597015

min 12790.849560 10022.401310 12269.273780

25% 12955.737547 12692.826335 15532.009725

50% 13120.625535 15363.251360 18794.745670

75% 13285.513523 15862.563915 18879.900590

max 13450.401510 16361.876470 18965.055510
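Here is a small check of the masking behaviour, using three 1962 values copied from the subset above. Indexing with a Boolean frame gives the same result as DataFrame.where, and reductions such as mean skip NaN unless you pass skipna=False.

```python
import pandas as pd

# Three 1962 values copied from the subset above
subset = pd.DataFrame(
    {"gdpPercap_1962": [8243.582340, 4649.593785, 12790.849560]},
    index=["Italy", "Montenegro", "Netherlands"],
)

mask = subset > 10000
masked = subset[mask]                      # NaN wherever the mask is False
print(masked.equals(subset.where(mask)))   # True: where() produces the same frame

col = masked["gdpPercap_1962"]
print(col.count())                         # 1: only one value survives the mask
print(col.mean())                          # NaNs are skipped by default
print(col.mean(skipna=False))              # nan: asking not to skip NaN changes the result
```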

Group By: split-apply-combine

Pandas methods and grouping operations give us flexibility in analyzing data.

For example, if we want to have a clearer view on how the European countries split themselves according to their GDP:

- We can split the countries into two groups for each year surveyed: those with a GDP higher than the European average for that year and those with a lower GDP.

- Then we can estimate a wealth score based on the historical values (from 1952 to 2007): the fraction of surveyed years in which a country was in the higher-GDP group.

mask_higher = data > data.mean()

wealth_score = mask_higher.aggregate('sum', axis=1) / len(data.columns)

wealth_score

country

Albania 0.000000

Austria 1.000000

Belgium 1.000000

Bosnia and Herzegovina 0.000000

Bulgaria 0.000000

Croatia 0.000000

Czech Republic 0.500000

Denmark 1.000000

Finland 1.000000

France 1.000000

Germany 1.000000

Greece 0.333333

Hungary 0.000000

Iceland 1.000000

Ireland 0.333333

Italy 0.500000

Montenegro 0.000000

Netherlands 1.000000

Norway 1.000000

Poland 0.000000

Portugal 0.000000

Romania 0.000000

Serbia 0.000000

Slovak Republic 0.000000

Slovenia 0.333333

Spain 0.333333

Sweden 1.000000

Switzerland 1.000000

Turkey 0.000000

United Kingdom 1.000000

dtype: float64

For each group in the wealth_score table (those never in the higher group, those always in the higher group, etc.), we can sum their (financial) contribution across the years surveyed (notice we are chaining calls to the groupby and sum methods):

data.groupby(wealth_score).sum()

gdpPercap_1952 gdpPercap_1957 gdpPercap_1962 gdpPercap_1967 \

0.000000 36916.854200 46110.918793 56850.065437 71324.848786

0.333333 16790.046878 20942.456800 25744.935321 33567.667670

0.500000 11807.544405 14505.000150 18380.449470 21421.846200

1.000000 104317.277560 127332.008735 149989.154201 178000.350040

gdpPercap_1972 gdpPercap_1977 gdpPercap_1982 gdpPercap_1987 \

0.000000 88569.346898 104459.358438 113553.768507 119649.599409

0.333333 45277.839976 53860.456750 59679.634020 64436.912960

0.500000 25377.727380 29056.145370 31914.712050 35517.678220

1.000000 215162.343140 241143.412730 263388.781960 296825.131210

gdpPercap_1992 gdpPercap_1997 gdpPercap_2002 gdpPercap_2007

0.000000 92380.047256 103772.937598 118590.929863 149577.357928

0.333333 67918.093220 80876.051580 102086.795210 122803.729520

0.500000 36310.666080 40723.538700 45564.308390 51403.028210

1.000000 315238.235970 346930.926170 385109.939210 427850.333420
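The same split-apply-combine steps can be traced on a tiny made-up frame, where the result is easy to check by hand. The countries A, B and C and their values are hypothetical. mask_higher.sum(axis=1) is used here as an equivalent of aggregate('sum', axis=1).

```python
import pandas as pd

# Made-up data: three hypothetical countries, two years (placeholder values)
data = pd.DataFrame(
    {"gdpPercap_1952": [100.0, 200.0, 400.0], "gdpPercap_1957": [110.0, 220.0, 440.0]},
    index=pd.Index(["A", "B", "C"], name="country"),
)

mask_higher = data > data.mean()                           # above that year's average?
wealth_score = mask_higher.sum(axis=1) / len(data.columns)  # fraction of years above average
print(wealth_score.to_dict())                              # {'A': 0.0, 'B': 0.0, 'C': 1.0}

totals = data.groupby(wealth_score).sum()  # split by score, sum each group, combine
print(totals)
```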

Selection of Individual Values

Assume Pandas has been imported into your notebook and the Gapminder GDP data for Europe has been loaded:

import pandas as pd
df = pd.read_csv('data/gapminder_gdp_europe.csv', index_col='country')

Write an expression to find the Per Capita GDP of Serbia in 2007.

Solution

The selection can be done by using the labels for both the row (“Serbia”) and the column (“gdpPercap_2007”):

print(df.loc['Serbia', 'gdpPercap_2007'])

The output is

9786.534714

Practice with Selection

Assume Pandas has been imported and the Gapminder GDP data for Europe has been loaded. Write an expression to select each of the following:

- GDP per capita for all countries in 1982.

- GDP per capita for Denmark for all years.

- GDP per capita for all countries for years after 1985.

Solution

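1:

data['gdpPercap_1982']

2:

data.loc['Denmark',:]

3:

data.loc[:,'gdpPercap_1985':]

Pandas does not give you an error here, although no column named gdpPercap_1985 actually exists. This works because the column labels are in sorted order, so Pandas can work out where 'gdpPercap_1985' would fall among them (between 'gdpPercap_1982' and 'gdpPercap_1987') and start the slice there; if the labels were not sorted, a slice with a missing label would raise a KeyError instead. This is useful if new columns are added to the CSV file later.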
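Here is a minimal check of the sorted-labels behaviour, using a made-up one-row frame with placeholder values. It relies on the column labels being sorted, and the sketch prints that property first.

```python
import pandas as pd

# Placeholder values; the column labels are in sorted order, as in the Gapminder files
df = pd.DataFrame(
    [[1, 2, 3]],
    index=["Denmark"],
    columns=["gdpPercap_1982", "gdpPercap_1987", "gdpPercap_1992"],
)

print(df.columns.is_monotonic_increasing)  # True: labels are sorted
after_1985 = df.loc[:, "gdpPercap_1985":]  # 'gdpPercap_1985' is not a column, but it sorts
print(after_1985.columns.tolist())         # between 1982 and 1987, so the slice starts at 1987
```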

Exploring available methods using the dir() function

Python includes a dir() function that can be used to display all of the available methods (functions) that are built into a data object. In Episode 4, we used some methods with a string. But we can see many more are available by using dir():

my_string = 'Hello world!'  # creation of a string object
dir(my_string)

This command returns:

['__add__', ... '__subclasshook__', 'capitalize', 'casefold', 'center', ... 'upper', 'zfill']

You can use help() or Shift+Tab to get more information about what these methods do.

Assume Pandas has been imported and the Gapminder GDP data for Europe has been loaded as data. Then, use dir() to find the function that prints out the median per-capita GDP across all European countries for each year that information is available.

Solution

Among many choices, dir() lists the median() function as a possibility. Thus,

data.median()
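The output of dir() is long, so it can help to filter it. The sketch below keeps only the public names on pd.DataFrame that mention "median". It then calls median() on a made-up one-column frame whose values are copied from this episode's output.

```python
import pandas as pd

# Public names (not starting with '_') on DataFrame that mention "median"
matches = [name for name in dir(pd.DataFrame) if "median" in name and not name.startswith("_")]
print(matches)  # includes 'median'

# Made-up one-column frame: median of each column
df = pd.DataFrame({"gdpPercap_1952": [1601.056136, 6137.076492, 8343.105127]})
print(df.median())
```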

Key Points

Use DataFrame.iloc[..., ...] to select values by integer location.

Use : on its own to mean all columns or all rows.

Select multiple columns or rows using DataFrame.loc and a named slice.

Result of slicing can be used in further operations.

Use comparisons to select data based on value.

Select values or NaN using a Boolean mask.

Plotting

Overview

Teaching: 15 min

Exercises: 15 min

Questions

How can I plot my data?

How can I save my plot for publishing?

Objectives

Create a time series plot showing a single data set.

Create a scatter plot showing relationship between two data sets.

matplotlib is the most widely used scientific plotting library in Python.

- Commonly use a sub-library called matplotlib.pyplot.

- The Jupyter Notebook will render plots inline by default.

import matplotlib.pyplot as plt

- Simple plots are then (fairly) simple to create.

time = [0, 1, 2, 3]

position = [0, 100, 200, 300]

plt.plot(time, position)

plt.xlabel('Time (hr)')

plt.ylabel('Position (km)')

Display All Open Figures

In our Jupyter Notebook example, running the cell should generate the figure directly below the code. The figure is also included in the Notebook document for future viewing. However, other Python environments like an interactive Python session started from a terminal or a Python script executed via the command line require an additional command to display the figure.

Instruct matplotlib to show a figure:

plt.show()

This command can also be used within a Notebook, for instance to display multiple figures if several are created by a single cell.

Plot data directly from a Pandas dataframe.

- We can also plot Pandas dataframes.

- This implicitly uses matplotlib.pyplot.

- Before plotting, we convert the column headings from a string to integer data type, since they represent numerical values.

import pandas as pd

data = pd.read_csv('data/gapminder_gdp_oceania.csv', index_col='country')

# Extract year from last 4 characters of each column name

# The current column names are structured as 'gdpPercap_(year)',

# so we want to keep the (year) part only for clarity when plotting GDP vs. years

# To do this we use strip(), which removes any of the characters listed in its argument from both

# ends of the string (it treats the argument as a set of characters, not as a prefix); this works

# here because none of the digits of the year appear in 'gdpPercap_'

# This method works on strings, so we call str before strip()

years = data.columns.str.strip('gdpPercap_')

# Convert year values to integers, saving results back to dataframe

data.columns = years.astype(int)

data.loc['Australia'].plot()
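strip() treats its argument as a set of characters, and that can be surprising. The sketch below shows this on a plain string and on a small made-up column index. It then shows str.replace(..., regex=False) as an alternative that removes the literal text 'gdpPercap_' instead.

```python
import pandas as pd

columns = pd.Index(["gdpPercap_1952", "gdpPercap_1957", "gdpPercap_2007"])

# strip() removes any of the given characters from both ends, not a literal prefix
print("apple_pie".strip("ape"))          # le_pi
via_strip = columns.str.strip("gdpPercap_")
print(via_strip.tolist())                # ['1952', '1957', '2007']

# Alternative: remove the literal text 'gdpPercap_' wherever it occurs
via_replace = columns.str.replace("gdpPercap_", "", regex=False)
print(via_replace.astype(int).tolist())  # [1952, 1957, 2007]
```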

Select and transform data, then plot it.

- By default, DataFrame.plot puts the row labels (the index) on the X axis and draws one line for each column.

- We can transpose the data in order to plot multiple series.

data.T.plot()

plt.ylabel('GDP per capita')

Many styles of plot are available.

- For example, do a bar plot using a fancier style.

plt.style.use('ggplot')

data.T.plot(kind='bar')

plt.ylabel('GDP per capita')

Data can also be plotted by calling the matplotlib plot function directly.

- The command is plt.plot(x, y)

- The color and format of markers can also be specified as an additional optional argument, e.g., b- is a blue line, g-- is a green dashed line.

# Get Australia data from dataframe

years = data.columns

gdp_australia = data.loc['Australia']

plt.plot(years, gdp_australia, 'g--')

Can plot many sets of data together.

# Select two countries' worth of data.

gdp_australia = data.loc['Australia']

gdp_nz = data.loc['New Zealand']

# Plot with differently-colored markers.

plt.plot(years, gdp_australia, 'b-', label='Australia')

plt.plot(years, gdp_nz, 'g-', label='New Zealand')

# Create legend.

plt.legend(loc='upper left')

plt.xlabel('Year')

plt.ylabel('GDP per capita ($)')

Adding a Legend

Often when plotting multiple datasets on the same figure it is desirable to have a legend describing the data.

This can be done in matplotlib in two stages:

- Provide a label for each dataset in the figure:

plt.plot(years, gdp_australia, label='Australia')
plt.plot(years, gdp_nz, label='New Zealand')

- Instruct matplotlib to create the legend.

plt.legend()

By default matplotlib will attempt to place the legend in a suitable position. If you would rather specify a position, this can be done with the loc= argument, e.g., to place the legend in the upper left corner of the plot, specify loc='upper left'.
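Here is a self-contained sketch of the two legend stages. It assumes the non-interactive Agg backend so that it also runs outside a notebook. It uses the object-oriented Axes interface (ax.plot, ax.legend), an alternative to the plt.* calls above. The values are rounded from the Oceania table earlier in this lesson.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: non-interactive backend so the sketch runs anywhere
import matplotlib.pyplot as plt

years = [1952, 1957, 1962]
gdp_australia = [10039.6, 10949.6, 12217.2]  # rounded from the Oceania table
gdp_nz = [10556.6, 12247.4, 13175.7]

fig, ax = plt.subplots()
ax.plot(years, gdp_australia, label="Australia")  # stage 1: label each dataset
ax.plot(years, gdp_nz, label="New Zealand")
legend = ax.legend(loc="upper left")              # stage 2: create the legend, with a position

print([text.get_text() for text in legend.get_texts()])  # ['Australia', 'New Zealand']
plt.close(fig)
```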

Saving your plot to a file

If you are satisfied with the plot you see you may want to save it to a file, perhaps to include it in a publication. There is a function in the matplotlib.pyplot module that accomplishes this: savefig.

The file format saved will automatically be deduced from the file name extension of the file name argument you call savefig with (png, pdf, ps, eps and svg).

Note that functions in plt refer to a global figure variable, and after a figure has been displayed to the screen (e.g. with plt.show) matplotlib will make this variable refer to a new empty figure. Therefore, make sure you call plt.savefig before the plot is displayed to the screen, otherwise you may find a file with an empty plot.

When using dataframes, data is often generated and plotted to screen in one line, and plt.savefig seems not to be a possible approach. One possibility to save the figure to file is then to

- save a reference to the current figure in a local variable (with plt.gcf)

- call the savefig method on that figure object.

Modifying our previous example to save the figure:

# Select two countries' worth of data.
gdp_australia = data.loc['Australia']
gdp_nz = data.loc['New Zealand']

# Get a reference to the current figure.
fig = plt.gcf()

# Plot with differently-colored markers.
plt.plot(years, gdp_australia, 'b-', label='Australia')
plt.plot(years, gdp_nz, 'g-', label='New Zealand')

# Create legend.
plt.legend(loc='upper left')
plt.xlabel('Year')
plt.ylabel('GDP per capita ($)')

# Save the figure to a file.
fig.savefig('oceania.png')
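To confirm that the figure really reaches the file, the sketch below saves a small plot and checks the PNG signature at the start of the file. It assumes the Agg backend. It writes to a temporary directory instead of the working directory, so the output location is an assumption of this example. The data are rounded from the Oceania table.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # assumption: non-interactive backend, nothing is shown on screen
import matplotlib.pyplot as plt

years = [1952, 1957, 1962]
gdp_australia = [10039.6, 10949.6, 12217.2]  # rounded from the Oceania table

plt.figure()         # start from a fresh figure
fig = plt.gcf()      # keep a reference to it
plt.plot(years, gdp_australia, 'b-', label='Australia')
plt.legend(loc='upper left')

# Hypothetical output location: a temporary directory
path = os.path.join(tempfile.mkdtemp(), 'oceania.png')
fig.savefig(path)    # the .png extension selects the PNG format
plt.close(fig)

with open(path, 'rb') as handle:
    header = handle.read(4)
print(header)        # b'\x89PNG': the PNG file signature
```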

- Plot a scatter plot correlating the GDP of Australia and New Zealand

- Use either plt.scatter or DataFrame.plot.scatter

plt.scatter(gdp_australia, gdp_nz)

data.T.plot.scatter(x = 'Australia', y = 'New Zealand')

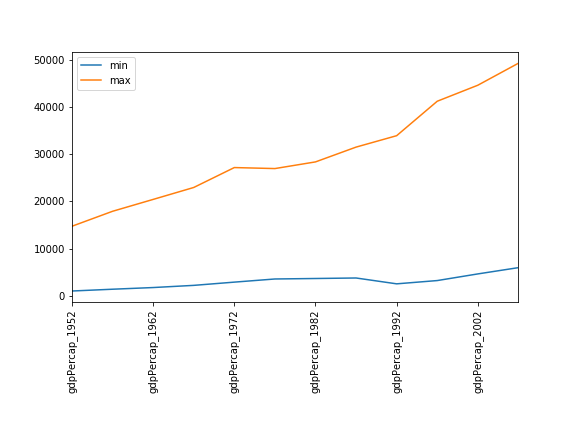

Minima and Maxima

Fill in the blanks below to plot the minimum GDP per capita over time for all the countries in Europe. Modify it again to plot the maximum GDP per capita over time for Europe.

data_europe = pd.read_csv('data/gapminder_gdp_europe.csv', index_col='country')
data_europe.____.plot(label='min')
data_europe.____
plt.legend(loc='best')
plt.xticks(rotation=90)

Solution

data_europe = pd.read_csv('data/gapminder_gdp_europe.csv', index_col='country')
data_europe.min().plot(label='min')
data_europe.max().plot(label='max')
plt.legend(loc='best')
plt.xticks(rotation=90)

Correlations

Modify the example in the notes to create a scatter plot showing the relationship between the minimum and maximum GDP per capita among the countries in Asia for each year in the data set. What relationship do you see (if any)?

Solution

data_asia = pd.read_csv('data/gapminder_gdp_asia.csv', index_col='country')
data_asia.describe().T.plot(kind='scatter', x='min', y='max')

No particular correlations can be seen between the minimum and maximum GDP values year on year. It seems the fortunes of Asian countries do not rise and fall together.

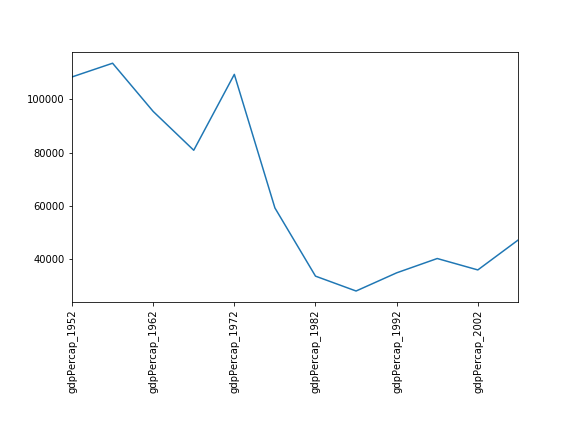

You might note that the variability in the maximum is much higher than that of the minimum. Take a look at the maximum and the max indexes:

data_asia = pd.read_csv('data/gapminder_gdp_asia.csv', index_col='country')
data_asia.max().plot()
print(data_asia.idxmax())
print(data_asia.idxmin())

Solution

It seems the variability in this value is due to a sharp drop after 1972. Some geopolitics at play perhaps? Given the dominance of oil-producing countries, maybe the Brent crude index would make an interesting comparison? Whilst Myanmar consistently has the lowest GDP, the highest-GDP nation has varied more notably.

More Correlations

This short program creates a plot showing the correlation between GDP and life expectancy for 2007, normalizing marker size by population:

data_all = pd.read_csv('data/gapminder_all.csv', index_col='country')
data_all.plot(kind='scatter', x='gdpPercap_2007', y='lifeExp_2007', s=data_all['pop_2007']/1e6)

Using online help and other resources, explain what each argument to plot does.

Solution

A good place to look is the documentation for the plot function - help(data_all.plot).

kind - As seen already this determines the kind of plot to be drawn.

x and y - A column name or index that determines what data will be placed on the x and y axes of the plot

s - Details for this can be found in the documentation of plt.scatter. A single number or one value for each data point. Determines the size of the plotted points.

Making your plots accessible

Whenever you are generating plots to go into a paper or a presentation, there are a few things you can do to make sure that everyone can understand your plots.

- Always make sure your text is large enough to read. Use the fontsize parameter in xlabel, ylabel, title, and legend, and tick_params with labelsize to increase the text size of the numbers on your axes.

- Similarly, you should make your graph elements easy to see. Use s to increase the size of your scatterplot markers and linewidth to increase the sizes of your plot lines.

- Using color (and nothing else) to distinguish between different plot elements will make your plots unreadable to anyone who is colorblind, or who happens to have a black-and-white office printer. For lines, the linestyle parameter lets you use different types of lines. For scatterplots, marker lets you change the shape of your points. If you’re unsure about your colors, you can use Coblis or Color Oracle to simulate what your plots would look like to those with colorblindness.
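Here is a minimal sketch applying these suggestions to the Oceania comparison: larger text, thicker lines, and line styles and markers that do not rely on color alone. It assumes the Agg backend so it runs outside a notebook. The specific sizes (16, 14, 3) are arbitrary choices for illustration, and the values are rounded from the Oceania table.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: non-interactive backend so the sketch runs anywhere
import matplotlib.pyplot as plt

years = [1952, 1957, 1962]
gdp_australia = [10039.6, 10949.6, 12217.2]  # rounded from the Oceania table
gdp_nz = [10556.6, 12247.4, 13175.7]

plt.figure()
# Different line styles and markers, not just colors, tell the two series apart
line_au, = plt.plot(years, gdp_australia, linestyle="-", marker="o", linewidth=3, label="Australia")
line_nz, = plt.plot(years, gdp_nz, linestyle="--", marker="s", linewidth=3, label="New Zealand")

# Larger text for axis labels, legend and tick numbers
plt.xlabel("Year", fontsize=16)
plt.ylabel("GDP per capita ($)", fontsize=16)
plt.legend(fontsize=14)
plt.tick_params(labelsize=14)

ax = plt.gca()
```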

Key Points

matplotlib is the most widely used scientific plotting library in Python.

Plot data directly from a Pandas dataframe.

Select and transform data, then plot it.

Many styles of plot are available: see the Python Graph Gallery for more options.

Can plot many sets of data together.

Lunch

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Objectives

Over lunch, reflect on and discuss the following:

- What sort of packages might you use in Python and why would you use them?

- How would data need to be formatted to be used in Pandas data frames? Would the data you have meet these requirements?

- What limitations or problems might you run into when thinking about how to apply what we’ve learned to your own projects or data?

Key Points

Lists

Overview

Teaching: 10 min

Exercises: 10 min

Questions

How can I store multiple values?

Objectives

Explain why programs need collections of values.

Write programs that create flat lists, index them, and modify them through assignment and method calls.

We have been working with one dataset at a time. With programming however, we have the ability to reproduce the same work on multiple sets of data. Let’s look at some concepts that will allow us to later group together a collection of file names for us to repeat analysis on.

A list stores many values in a single structure.

- Doing calculations with a hundred variables called pressure_001, pressure_002, etc., would be at least as slow as doing them by hand.

- Use a list to store many values together.

- Contained within square brackets [...].

- Values separated by commas ,.

- Use len to find out how many values are in a list.

pressures = [0.273, 0.275, 0.277, 0.275, 0.276]

print('pressures:', pressures)

print('length:', len(pressures))

pressures: [0.273, 0.275, 0.277, 0.275, 0.276]

length: 5

Use an item’s index to fetch it from a list.

- First item starts at 0.

- Items can be referenced counting from the end with negative numbers.

print('first item:', pressures[0])

print('fifth item:', pressures[4])

print('last item:', pressures[-1])

first item: 0.273

fifth item: 0.276

last item: 0.276

Indexing beyond the end of the collection is an error.

- Python reports an IndexError if we attempt to access a value that doesn’t exist.

- This is a kind of runtime error.

- Cannot be detected as the code is parsed because the index might be calculated based on data.

print('100th item:', pressures[99])

IndexError: list index out of range
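Here is a small sketch of two ways to deal with an out-of-range index. One catches the IndexError with try/except. The other checks the index against len() before using it, which avoids the error altogether.

```python
pressures = [0.273, 0.275, 0.277, 0.275, 0.276]
index = 99

try:
    print('100th item:', pressures[index])
except IndexError as error:
    message = str(error)
    print('IndexError:', message)   # IndexError: list index out of range

# Checking against len() first avoids the error altogether
if index < len(pressures):
    print(pressures[index])
else:
    print('only', len(pressures), 'items, so index', index, 'does not exist')
```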

Lists’ values can be replaced by assigning to them.

- Use an index expression on the left of assignment to replace a value.

pressures[0] = 0.265

print('pressures is now:', pressures)

pressures is now: [0.265, 0.275, 0.277, 0.275, 0.276]

Appending items to a list lengthens it.

- Use list_name.append to add items to the end of a list.

pressures.append(0.269)

print('pressures:', pressures)

pressures: [0.265, 0.275, 0.277, 0.275, 0.276, 0.269]

- append is a method of lists.

- Like a function, but tied to a particular object.

- Use object_name.method_name to call methods.

- Deliberately resembles the way we refer to things in a library.

- There are many other methods of lists.

- Use help(list) to view.
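A few of the other list methods listed by help(list) are shown below. This is a sketch using the pressures values from above. insert and pop change the list in place, while count and index only report information about it.

```python
pressures = [0.265, 0.275, 0.277, 0.275, 0.276, 0.269]

pressures.insert(0, 0.260)     # insert a value at index 0 (modifies the list in place)
last = pressures.pop()         # remove and return the last item
print(pressures.count(0.275))  # 2: how many times 0.275 appears
print(pressures.index(0.277))  # 3: position of the first 0.277 (after the insert)
print(last, len(pressures))    # 0.269 6
```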

Key Points

A list stores many values in a single structure.

Use an item’s index to fetch it from a list.

Lists’ values can be replaced by assigning to them.

Appending items to a list lengthens it.

Indexing beyond the end of the collection is an error.

For Loops

Overview

Teaching: 10 min

Exercises: 15 min

Questions

How can I make a program do many things?

Objectives

Explain what for loops are normally used for.

A for loop executes commands once for each value in a collection.

- Doing calculations on the values in a list one by one is as painful as working with pressure_001, pressure_002, etc.

- A for loop tells Python to execute some statements once for each value in a list, a character string, or some other collection.

- “for each thing in this group, do these operations”

for number in [2, 3, 5]:

    print(number)

- This for loop is equivalent to:

print(2)

print(3)

print(5)

- And the for loop’s output is:

2

3

5
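The same loop shape works on a character string, which is also a collection. In this small sketch the body runs once per character.

```python
letters = []
for char in "tin":          # a string is a collection of characters
    letters.append(char)    # the body runs once per character
print(letters)              # ['t', 'i', 'n']
```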

A for loop is made up of a collection, a loop variable, and a body.

for number in [2, 3, 5]:

    print(number)

- The collection, [2, 3, 5], is what the loop is being run on.

- The body, print(number), specifies what to do for each value in the collection.

- The loop variable, number, is what changes for each iteration of the loop.

- The “current thing”.

The first line of the for loop must end with a colon, and the body must be indented.

- The colon at the end of the first line signals the start of a block of statements.

- Python uses indentation rather than {} or begin/end to show nesting.

- Any consistent indentation is legal, but almost everyone uses four spaces.

for number in [2, 3, 5]:

print(number)

IndentationError: expected an indented block

- Indentation is always meaningful in Python.

firstName = "Jon"

  lastName = "Smith"

  File "<ipython-input-7-f65f2962bf9c>", line 2

    lastName = "Smith"

    ^

IndentationError: unexpected indent

- This error can be fixed by removing the extra spaces at the beginning of the second line.
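The IndexError from the lists episode only appears when the code runs. An IndentationError, by contrast, is found while Python parses the code. The sketch below shows this by passing the two-line example to the built-in compile(), which parses the source without executing it.

```python
source = 'firstName = "Jon"\n  lastName = "Smith"\n'

try:
    compile(source, "<example>", "exec")   # parse only; nothing is executed
except IndentationError as error:
    message = error.msg
    print("IndentationError:", message)    # IndentationError: unexpected indent
```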

Loop variables can be called anything.

- As with all variables, loop variables are:
    - Created on demand.
    - Meaningless: their names can be anything at all.

for kitten in [2, 3, 5]:
    print(kitten)
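The two loops below differ only in the name of the loop variable, and they do exactly the same thing. The last line also shows a piece of standard Python behavior not mentioned above: after the loop finishes, the loop variable still exists and holds the last value it was given.

```python
# 'kitten' did not exist before this loop: the for statement creates it.
kittens_seen = []
for kitten in [2, 3, 5]:
    kittens_seen.append(kitten)

numbers_seen = []
for number in [2, 3, 5]:
    numbers_seen.append(number)

print(kittens_seen == numbers_seen)  # True: the name does not change the behavior
print(kitten)                        # 5: the variable still holds the last value
```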

The body of a loop can contain many statements.

- But no loop should be more than a few lines long.
    - It is hard for human beings to keep larger chunks of code in mind.

for number in [2, 3, 5]:
    squared = number ** 2
    print(number, squared)

2 4

3 9

5 25

Reversing a String

Fill in the blanks in the program below so that it prints “nit” (the reverse of the original character string “tin”).

original = "tin"
result = ____
for char in original:
    result = ____
print(result)

Solution

original = "tin"
result = ""
for char in original:
    result = char + result
print(result)

Practice Accumulating

Fill in the blanks in each of the programs below to produce the indicated result.

# Total length of the strings in the list: ["red", "green", "blue"] => 12
total = 0
for word in ["red", "green", "blue"]:
    ____ = ____ + len(word)
print(total)

Solution

total = 0
for word in ["red", "green", "blue"]:
    total = total + len(word)
print(total)

# List of word lengths: ["red", "green", "blue"] => [3, 5, 4]
lengths = ____
for word in ["red", "green", "blue"]:
    lengths.____(____)
print(lengths)

Solution

lengths = []
for word in ["red", "green", "blue"]:
    lengths.append(len(word))
print(lengths)

# Concatenate all words: ["red", "green", "blue"] => "redgreenblue"
words = ["red", "green", "blue"]
result = ____
for ____ in ____:
    ____
print(result)

Solution

words = ["red", "green", "blue"]
result = ""
for word in words:
    result = result + word
print(result)

Cumulative Sum

Reorder and properly indent the lines of code below so that they print a list with the cumulative sum of data. The result should be [1, 3, 5, 10].

cumulative.append(total)
for number in data:
cumulative = []
total = total + number
total = 0
print(cumulative)
data = [1,2,2,5]

Solution

total = 0
data = [1, 2, 2, 5]
cumulative = []
for number in data:
    total = total + number
    cumulative.append(total)
print(cumulative)
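As a cross-check on the solution, the standard library's itertools.accumulate (an alternative not covered in this lesson) yields the same running totals. This sketch computes both and compares them:

```python
from itertools import accumulate

data = [1, 2, 2, 5]

# Loop version, as in the solution above.
total = 0
cumulative = []
for number in data:
    total = total + number
    cumulative.append(total)

# Standard-library version: accumulate adds the values up one at a time by default.
from_accumulate = list(accumulate(data))

print(cumulative, from_accumulate)  # [1, 3, 5, 10] [1, 3, 5, 10]
```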

Key Points

A for loop executes commands once for each value in a collection.

A for loop is made up of a collection, a loop variable, and a body.

The first line of the for loop must end with a colon, and the body must be indented.

Indentation is always meaningful in Python.

Loop variables can be called anything (but it is strongly advised to give the loop variable a meaningful name).

The body of a loop can contain many statements.

Looping Over Data Sets

Overview

Teaching: 5 min

Exercises: 10 min

Questions

How can I process many data sets with a single command?

Objectives

Be able to read and write globbing expressions that match sets of files.

Use glob to create lists of files.

Write for loops to perform operations on files given their names in a list.

Use a for loop to process files given a list of their names.

- A filename is a character string.

- And lists can contain character strings.

import pandas as pd
for filename in ['data/gapminder_gdp_africa.csv', 'data/gapminder_gdp_asia.csv']:
    data = pd.read_csv(filename, index_col='country')
    print(filename)
    print(data.min())

data/gapminder_gdp_africa.csv
gdpPercap_1952    298.846212
gdpPercap_1957    335.997115
gdpPercap_1962    355.203227
gdpPercap_1967    412.977514
      ⋮ ⋮ ⋮
gdpPercap_1997    312.188423
gdpPercap_2002    241.165877
gdpPercap_2007    277.551859
dtype: float64
data/gapminder_gdp_asia.csv
gdpPercap_1952    331
gdpPercap_1957    350
gdpPercap_1962    388
gdpPercap_1967    349
      ⋮ ⋮ ⋮
gdpPercap_1997    415
gdpPercap_2002    611
gdpPercap_2007    944
dtype: float64

Use glob.glob to find sets of files whose names match a pattern.

- In Unix, the term “globbing” means “matching a set of files with a pattern”.

- The most common patterns are:
    - * meaning “match zero or more characters”
    - ? meaning “match exactly one character”

- Python’s standard library contains the glob module to provide pattern matching functionality.

- The glob module contains a function also called glob to match file patterns.

- E.g., glob.glob('*.csv') matches all files in the current directory whose names end with .csv.
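The difference between * and ? can be seen without the gapminder data. This sketch creates a few empty files with hypothetical names in a temporary folder and matches them with both patterns. It sorts the results because glob.glob does not promise any particular order, and it uses glob.escape so that any special characters in the temporary folder's own path are not treated as a pattern:

```python
import glob
import os
import tempfile

with tempfile.TemporaryDirectory() as folder:
    # Hypothetical file names, created as empty files just for this demonstration.
    for name in ["a1.csv", "a22.csv", "b1.csv", "notes.txt"]:
        open(os.path.join(folder, name), "w").close()

    # Escape the folder path so only the file-name part acts as a pattern.
    prefix = glob.escape(folder)

    # '*' matches zero or more characters: every name ending in .csv matches.
    star_matches = sorted(
        os.path.basename(path) for path in glob.glob(os.path.join(prefix, "*.csv"))
    )

    # '?' matches exactly one character: 'a22.csv' has two characters after 'a', so it is excluded.
    question_matches = sorted(
        os.path.basename(path) for path in glob.glob(os.path.join(prefix, "a?.csv"))
    )

print(star_matches)      # ['a1.csv', 'a22.csv', 'b1.csv']
print(question_matches)  # ['a1.csv']
```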

import glob

print('all csv files in data directory:', glob.glob('data/*.csv'))

all csv files in data directory: ['data/gapminder_all.csv', 'data/gapminder_gdp_africa.csv', \

'data/gapminder_gdp_americas.csv', 'data/gapminder_gdp_asia.csv', 'data/gapminder_gdp_europe.csv', \

'data/gapminder_gdp_oceania.csv']

Use glob and for to process batches of files.

- It helps a lot if the files are named and stored systematically and consistently so that simple patterns will find the right data.

for filename in glob.glob('data/gapminder_*.csv'):
    data = pd.read_csv(filename)
    print(filename, data['gdpPercap_1952'].min())

data/gapminder_all.csv 298.8462121

data/gapminder_gdp_africa.csv 298.8462121

data/gapminder_gdp_americas.csv 1397.717137

data/gapminder_gdp_asia.csv 331.0

data/gapminder_gdp_europe.csv 973.5331948

data/gapminder_gdp_oceania.csv 10039.59564

- This includes all data, as well as per-region data.

- Use a more specific pattern in the exercises to exclude the whole data set.

- But note that the minimum of the entire data set is also the minimum of one of the data sets, which is a nice check on correctness.
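The glob-plus-for pattern does not depend on pandas. This sketch repeats the shape of the loop above using only the standard library's csv module, so it runs without the gapminder files: it writes two miniature files with hypothetical names and made-up values into a temporary folder, then finds the minimum gdpPercap_1952 in each matching file.

```python
import csv
import glob
import os
import tempfile

# Hypothetical miniature files in the gapminder layout; the numbers are made up.
files = {
    "gapminder_gdp_north.csv": "country,gdpPercap_1952\nA,300.0\nB,150.5\n",
    "gapminder_gdp_south.csv": "country,gdpPercap_1952\nC,975.0\nD,1200.0\n",
}

minimums = {}
with tempfile.TemporaryDirectory() as folder:
    for name, text in files.items():
        with open(os.path.join(folder, name), "w", newline="") as handle:
            handle.write(text)

    # One pass through the loop body per file that matches the pattern.
    pattern = os.path.join(glob.escape(folder), "gapminder_gdp_*.csv")
    for filename in sorted(glob.glob(pattern)):
        with open(filename, newline="") as handle:
            values = [float(row["gdpPercap_1952"]) for row in csv.DictReader(handle)]
        minimums[os.path.basename(filename)] = min(values)

print(minimums)
# {'gapminder_gdp_north.csv': 150.5, 'gapminder_gdp_south.csv': 975.0}
```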

Comparing Data

Write a program that reads in the regional data sets and plots the average GDP per capita for each region over time in a single chart.

Solution

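import glob
import pandas as pd
import matplotlib.pyplot as plt

fig, ax = plt.subplots(1, 1)
for filename in glob.glob('data/gapminder_gdp*.csv'):
    dataframe = pd.read_csv(filename)
    # numeric_only=True skips text columns such as 'country',
    # which newer pandas versions will not average.
    dataframe.mean(numeric_only=True).plot(ax=ax, label=filename)
plt.legend()
plt.show()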

Key Points

Use a for loop to process files given a list of their names.

Use glob.glob to find sets of files whose names match a pattern.

Use glob and for to process batches of files.

Conditionals

Overview

Teaching: 10 min

Exercises: 15 min

Questions

How can programs do different things for different data?

Objectives

Correctly write programs that use if and else statements and simple Boolean expressions (without logical operators).

Use if statements to control whether or not a block of code is executed.

- An if statement (more properly called a conditional statement) controls whether some block of code is executed or not.

- Structure is similar to a for statement:
    - First line opens with if and ends with a colon.
    - Body containing one or more statements is indented (usually by 4 spaces).

mass = 3.54
if mass > 3.0:
    print(mass, 'is large')

mass = 2.07
if mass > 3.0:
    print(mass, 'is large')

3.54 is large

Conditionals are often used inside loops.

- Not much point using a conditional when we know the value (as above).

- But useful when we have a collection to process.

masses = [3.54, 2.07, 9.22, 1.86, 1.71]
for m in masses:
    if m > 3.0:
        print(m, 'is large')

3.54 is large

9.22 is large
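A common use of a conditional inside a loop is filtering: keeping only the values that pass a test. A minimal sketch with the same masses, collecting the large ones into a new list instead of printing them:

```python
masses = [3.54, 2.07, 9.22, 1.86, 1.71]
large = []
for m in masses:
    if m > 3.0:
        large.append(m)  # only runs when the condition is true
print(large)  # [3.54, 9.22]
```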

Use else to execute a block of code when an if condition is not true.

- else can be used following an if.

- Allows us to specify an alternative to execute when the if branch isn’t taken.

masses = [3.54, 2.07, 9.22, 1.86, 1.71]
for m in masses:
    if m > 3.0:
        print(m, 'is large')
    else:
        print(m, 'is small')

3.54 is large

2.07 is small

9.22 is large

1.86 is small

1.71 is small
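With else, every value goes down exactly one of the two branches. One way to see this is to count each branch instead of printing; the two counts always add up to the number of values:

```python
masses = [3.54, 2.07, 9.22, 1.86, 1.71]
n_large = 0
n_small = 0
for m in masses:
    if m > 3.0:
        n_large = n_large + 1
    else:
        n_small = n_small + 1  # runs exactly when the if test is false

print(n_large, n_small)  # 2 3
```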

Use elif to specify additional tests.

- May want to provide several alternative choices, each with its own test.

- Use elif (short for “else if”) and a condition to specify these.

- Always associated with an if.

- Must come before the else (which is the “catch all”).

masses = [3.54, 2.07, 9.22, 1.86, 1.71]
for m in masses:
    if m > 9.0:
        print(m, 'is HUGE')
    elif m > 3.0:
        print(m, 'is large')
    else:
        print(m, 'is small')

3.54 is large

2.07 is small

9.22 is HUGE

1.86 is small

1.71 is small
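Python checks the tests from the top and runs only the first branch whose condition is true, which is why the order of the tests matters. A sketch with the two tests swapped: because every mass above 9.0 is also above 3.0, the elif m > 9.0 branch can never be reached, and 9.22 is labeled 'large' instead of 'HUGE':

```python
masses = [3.54, 2.07, 9.22, 1.86, 1.71]
labels = []
for m in masses:
    if m > 3.0:      # tested first, so it also catches 9.22
        labels.append('large')
    elif m > 9.0:    # unreachable: any m > 9.0 already passed the test above
        labels.append('HUGE')
    else:
        labels.append('small')

print(labels)  # ['large', 'small', 'large', 'small', 'small']
```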

Compound Relations Using and and or

Often, you want some combination of things to be true. You can combine relations within a conditional using the and and or operators. Let’s look at an example with our gapminder data in mind to calculate what quartile a given life expectancy value will fall into.

expectancies = [62.5, 57.9, 81.0, -1]
for exp in expectancies:
    if exp > 0 and exp < 58.41:
        # This observation is in the first quartile
        quartile = 1
    elif exp >= 58.41 and exp < 67.05:
        # This observation is in the second quartile
        quartile = 2
    elif exp >= 67.05 and exp < 71.70:
        # This observation is in the third quartile
        quartile = 3
    elif exp >= 71.70:
        # This observation is in the fourth quartile
        quartile = 4
    else:
        # This observation has bad data
        quartile = None
    print('life expectancy', exp, 'is in quartile', quartile)

life expectancy 62.5 is in quartile 2
life expectancy 57.9 is in quartile 1
life expectancy 81.0 is in quartile 4
life expectancy -1 is in quartile None
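Because each lower bound uses >= and each upper bound uses <, a value sitting exactly on a cutoff falls into the higher quartile, and 0 counts as bad data because exp > 0 is false. A sketch that runs the same tests on the cutoff values themselves (chosen here only to probe the boundaries):

```python
boundaries = [58.41, 67.05, 71.70, 0]
quartiles = []
for exp in boundaries:
    if exp > 0 and exp < 58.41:
        quartile = 1
    elif exp >= 58.41 and exp < 67.05:
        quartile = 2
    elif exp >= 67.05 and exp < 71.70:
        quartile = 3
    elif exp >= 71.70:
        quartile = 4
    else:
        quartile = None  # both parts of 'exp > 0 and ...' must be true; 0 fails the first
    quartiles.append(quartile)

print(quartiles)  # [2, 3, 4, None]
```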

Processing Small Files

Modify this program so that it only processes files with fewer than 50 records.

import glob
import pandas as pd
for filename in glob.glob('data/*.csv'):
    contents = pd.read_csv(filename)
    ____:
        print(filename, len(contents))

Solution

import glob
import pandas as pd
for filename in glob.glob('data/*.csv'):
    contents = pd.read_csv(filename)
    if len(contents) < 50:
        print(filename, len(contents))

Key Points

Use if statements to control whether or not a block of code is executed.

Conditionals are often used inside loops.

Use else to execute a block of code when an if condition is not true.

Use elif to specify additional tests.

Afternoon Coffee

Overview

Teaching: 0 min

Exercises: 0 min

Questions

Objectives

Reflection exercise

Over break, reflect on and discuss the following: